The Brainteaser dataset

This dataset was released concurrently with our NeurIPS 2025 paper [Code].

Citation

If you use this dataset, please cite our paper:

@article{han2025creativity,
  title={Creativity or Brute Force? Using Brainteasers as a Window into the Problem-Solving Abilities of Large Language Models},
  author={Han, Simeng and Xia, Stephen and Zhang, Grant and Dai, Howard and Liu, Chen and Chen, Lichang and Nguyen, Hoang Huy and Mei, Hongyuan and Mao, Jiayuan and McCoy, R. Thomas},
  journal={Advances in Neural Information Processing Systems},
  year={2025}
}

Highlights

We introduce a novel benchmark dataset, BRAINTEASER, which uses brainteasers to evaluate the reasoning abilities of LLMs.

The Math and Logic subsets were curated by scraping problem-solving and reasoning questions from the Braingle website, an online platform of puzzles and brainteasers.

BRAINTEASER focuses exclusively on mathematical and logical puzzles, all authored by expert problem solvers with demonstrated proficiency across a wide range of puzzle types. The puzzles span diverse styles and levels of complexity, and the benchmark aims to isolate models' reasoning abilities rather than their memorization of formulas.

For quality control, every problem in BRAINTEASER went through one round of manual inspection by college students from a math club, all of whom have extensive experience solving competition-level math problems.

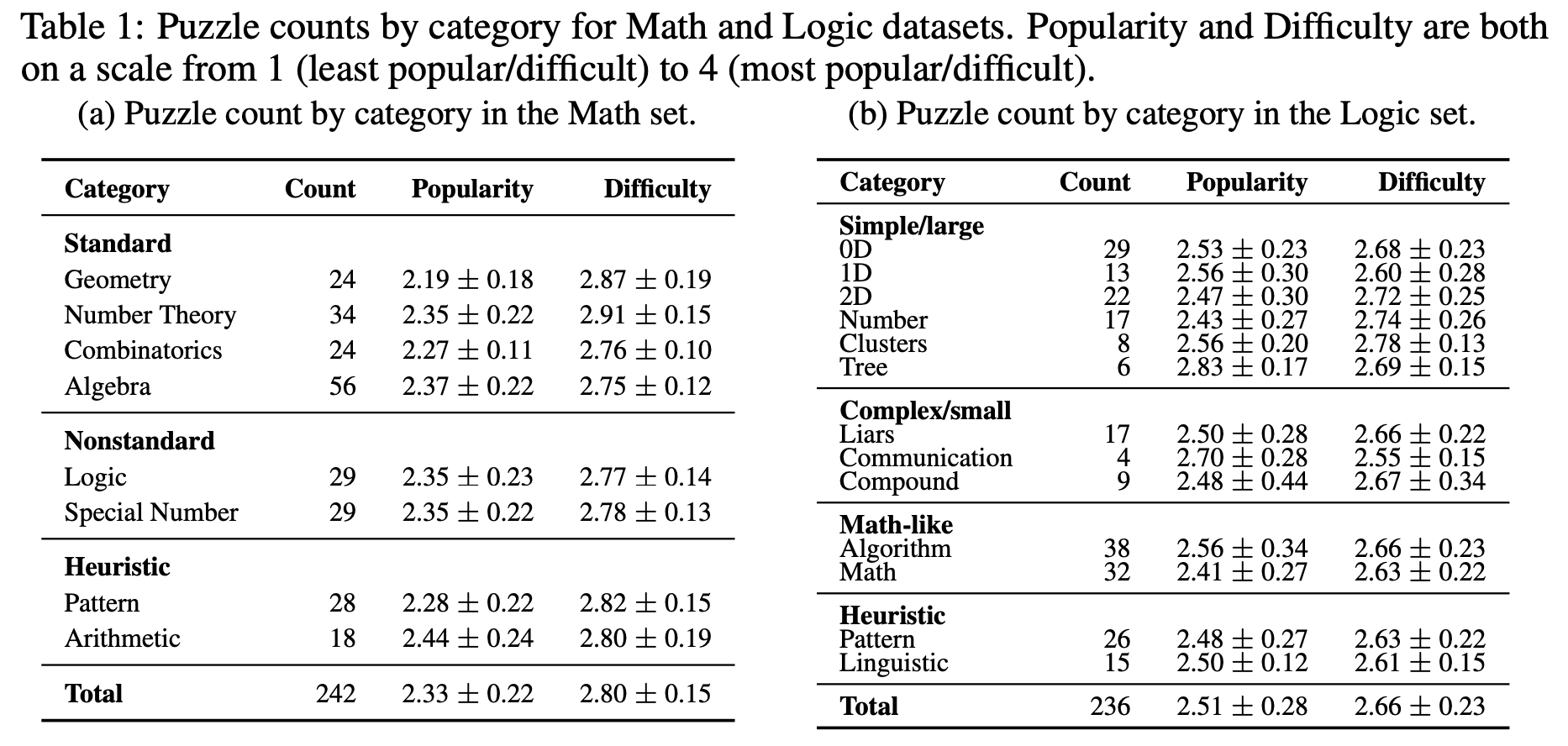

During manual inspection, low-quality and ambiguously described problems were removed, leaving 242 Math and 236 Logic problems in the dataset. The same annotators also manually created hints for problems that originally lacked them.

The same annotators assigned categories and subcategories to all puzzles (see Table 1).
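To make the annotation fields described above concrete, here is a minimal Python sketch of how a puzzle record and a per-category tally might look. The `Puzzle` class, its field names (`split`, `question`, `answer`, `hint`, `category`, `subcategory`), and the helpers `composition` and `missing_hints` are hypothetical illustrations, not the dataset's published schema or loader; check the released files for the actual column names.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List, Optional


# Hypothetical record layout: these field names are illustrative
# assumptions, not the dataset's actual schema.
@dataclass(frozen=True)
class Puzzle:
    split: str             # "Math" or "Logic"
    question: str
    answer: str
    hint: Optional[str]    # annotators added hints where the original had none
    category: str
    subcategory: str


def composition(puzzles: Iterable[Puzzle]) -> Counter:
    """Count puzzles per (split, category, subcategory) triple."""
    return Counter((p.split, p.category, p.subcategory) for p in puzzles)


def missing_hints(puzzles: Iterable[Puzzle]) -> List[Puzzle]:
    """Return puzzles without a hint (empty if every puzzle received one)."""
    return [p for p in puzzles if not p.hint]


if __name__ == "__main__":
    # Toy placeholder records, not real BRAINTEASER entries.
    toy = [
        Puzzle("Math", "q1", "a1", "h1", "cat_x", "sub_1"),
        Puzzle("Math", "q2", "a2", "h2", "cat_x", "sub_1"),
        Puzzle("Logic", "q3", "a3", "h3", "cat_y", "sub_2"),
    ]
    print(composition(toy)[("Math", "cat_x", "sub_1")])  # 2
    print(len(missing_hints(toy)))  # 0
```

Keying the tally on the (split, category, subcategory) triple mirrors the kind of breakdown a composition table reports, and the hint check reflects the annotators' goal of giving every problem a hint.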

Dataset composition

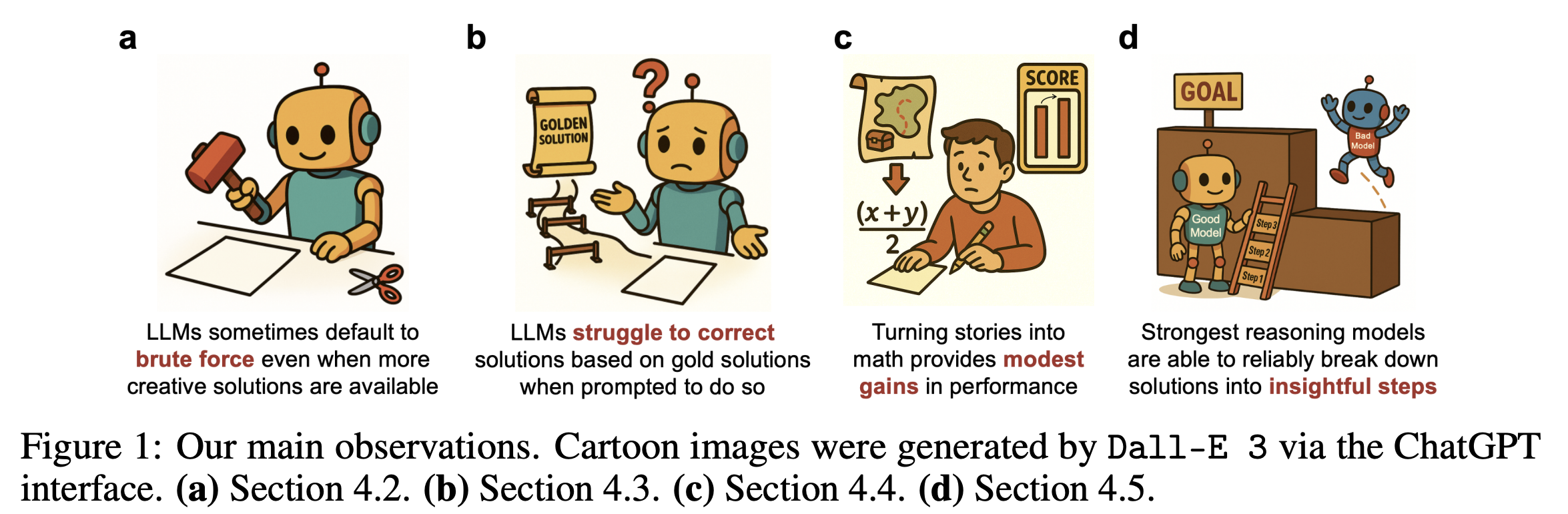

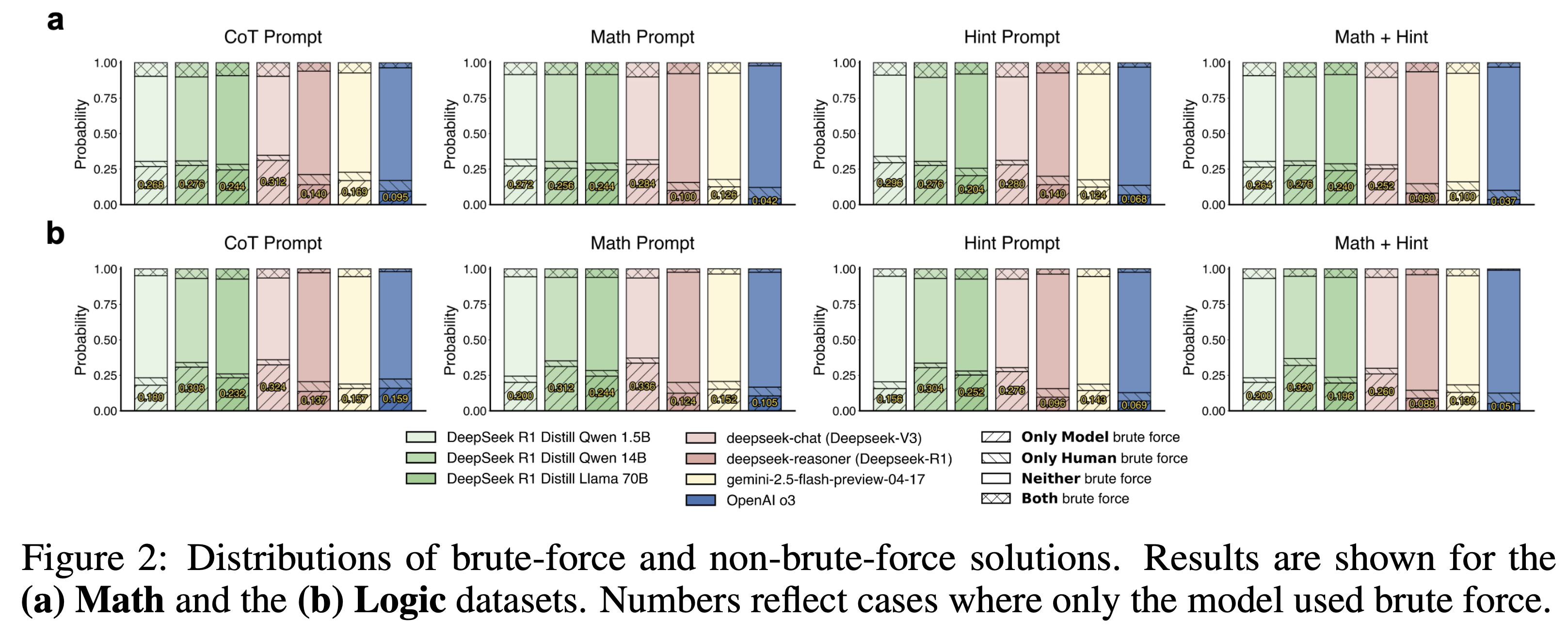

Main observations

Creativity vs. Brute Force
