Update README.md


README.md

CHANGED

|

@@ -7,52 +7,9 @@ license: mit

|

|

| 7 |

pipeline_tag: text-generation

|

| 8 |

---

|

| 9 |

|

| 10 |

-

|

| 11 |

|

| 12 |

-

|

| 13 |

-

<img src=https://raw.githubusercontent.com/zai-org/GLM-4.5/refs/heads/main/resources/logo.svg width="15%"/>

|

| 14 |

-

</div>

|

| 15 |

-

<p align="center">

|

| 16 |

-

👋 Join our <a href="https://discord.gg/QR7SARHRxK" target="_blank">Discord</a> community.

|

| 17 |

-

<br>

|

| 18 |

-

📖 Check out the GLM-4.6 <a href="https://z.ai/blog/glm-4.6" target="_blank">technical blog</a>, <a href="https://arxiv.org/abs/2508.06471" target="_blank">technical report(GLM-4.5)</a>, and <a href="https://zhipu-ai.feishu.cn/wiki/Gv3swM0Yci7w7Zke9E0crhU7n7D" target="_blank">Zhipu AI technical documentation</a>.

|

| 19 |

-

<br>

|

| 20 |

-

📍 Use GLM-4.6 API services on <a href="https://docs.z.ai/guides/llm/glm-4.6">Z.ai API Platform. </a>

|

| 21 |

-

<br>

|

| 22 |

-

👉 One click to <a href="https://chat.z.ai">GLM-4.6</a>.

|

| 23 |

-

</p>

|

| 24 |

|

| 25 |

-

|

| 26 |

|

| 27 |

-

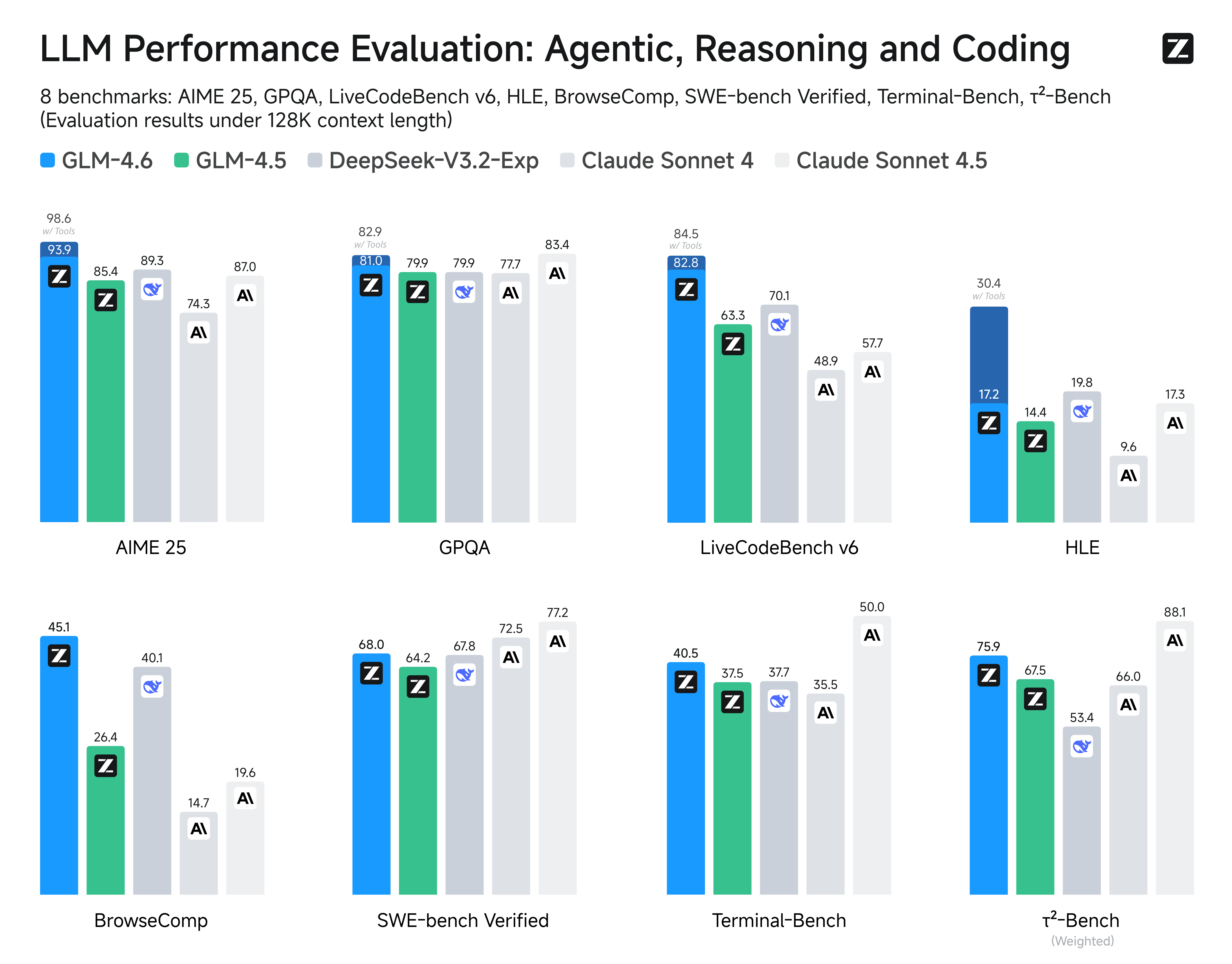

Compared with GLM-4.5, **GLM-4.6** brings several key improvements:

|

| 28 |

-

|

| 29 |

-

* **Longer context window:** The context window has been expanded from 128K to 200K tokens, enabling the model to handle more complex agentic tasks.

|

| 30 |

-

* **Superior coding performance:** The model achieves higher scores on code benchmarks and demonstrates better real-world performance in applications such as Claude Code、Cline、Roo Code and Kilo Code, including improvements in generating visually polished front-end pages.

|

| 31 |

-

* **Advanced reasoning:** GLM-4.6 shows a clear improvement in reasoning performance and supports tool use during inference, leading to stronger overall capability.

|

| 32 |

-

* **More capable agents:** GLM-4.6 exhibits stronger performance in tool using and search-based agents, and integrates more effectively within agent frameworks.

|

| 33 |

-

* **Refined writing:** Better aligns with human preferences in style and readability, and performs more naturally in role-playing scenarios.

|

| 34 |

-

|

| 35 |

-

We evaluated GLM-4.6 across eight public benchmarks covering agents, reasoning, and coding. Results show clear gains over GLM-4.5, with GLM-4.6 also holding competitive advantages over leading domestic and international models such as **DeepSeek-V3.1-Terminus** and **Claude Sonnet 4**.

|

| 36 |

-

|

| 37 |

-

|

| 38 |

-

|

| 39 |

-

## Inference

|

| 40 |

-

|

| 41 |

-

**Both GLM-4.5 and GLM-4.6 use the same inference method.**

|

| 42 |

-

|

| 43 |

-

you can check our [github](https://github.com/zai-org/GLM-4.5) for more detail.

|

| 44 |

-

|

| 45 |

-

## Recommended Evaluation Parameters

|

| 46 |

-

|

| 47 |

-

For general evaluations, we recommend using a **sampling temperature of 1.0**.

|

| 48 |

-

|

| 49 |

-

For **code-related evaluation tasks** (such as LCB), it is further recommended to set:

|

| 50 |

-

|

| 51 |

-

- `top_p = 0.95`

|

| 52 |

-

- `top_k = 40`

|

| 53 |

-

|

| 54 |

-

|

| 55 |

-

## Evaluation

|

| 56 |

-

|

| 57 |

-

- For tool-integrated reasoning, please refer to [this doc](https://github.com/zai-org/GLM-4.5/blob/main/resources/glm_4.6_tir_guide.md).

|

| 58 |

-

- For search benchmark, we design a specific format for searching toolcall in thinking mode to support search agent, please refer to [this](https://github.com/zai-org/GLM-4.5/blob/main/resources/trajectory_search.json). for the detailed template.

|

|

|

|

| 7 |

pipeline_tag: text-generation

|

| 8 |

---

|

| 9 |

|

| 10 |

+

2.33 bpw EXL3 quant of [GLM-4.6](https://huggingface.co/zai-org/GLM-4.6)

|

| 11 |

|

| 12 |

+

Base quants [provided by MikeRoz](https://huggingface.co/MikeRoz/GLM-4.6-exl3)

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 13 |

|

| 14 |

+

This is just a quick mix of the 2.25 bpw quant with attention, dense layers and shared experts in 4.0 bpw.

|

| 15 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|
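The new README describes a 2.33 bpw file. It is built from the 2.25 bpw quant, with attention, dense layers and shared experts raised to 4.0 bpw. You can get a rough sense of what that mix implies by solving 2.25(1 − f) + 4.0 f = 2.33 for f, the share of weights kept at 4.0 bpw.

This is a back-of-the-envelope sketch with two assumptions. First, the headline bpw is a plain parameter-weighted average. Second, per-tensor overhead and the rounding of "2.33" are ignored. The helper names are hypothetical and not part of ExLlamaV3.

```python
# Hypothetical sanity check, not part of ExLlamaV3 or the quant's tooling.
# Assumption: overall bpw is a plain parameter-weighted average of the two
# precisions, ignoring per-tensor overhead and the rounding of "2.33".


def mixed_bpw(low_bpw: float, high_bpw: float, high_fraction: float) -> float:
    """Average bits per weight when `high_fraction` of the weights use `high_bpw`."""
    return low_bpw * (1.0 - high_fraction) + high_bpw * high_fraction


def implied_high_fraction(low_bpw: float, high_bpw: float, target_bpw: float) -> float:
    """Solve mixed_bpw(low_bpw, high_bpw, f) == target_bpw for f."""
    return (target_bpw - low_bpw) / (high_bpw - low_bpw)


if __name__ == "__main__":
    # Figures from the new README: 2.25 bpw base, 4.0 bpw for attention,
    # dense layers and shared experts, 2.33 bpw overall.
    share = implied_high_fraction(2.25, 4.0, 2.33)
    print(f"implied share of weights at 4.0 bpw: {share:.1%}")
    print(f"round trip: {mixed_bpw(2.25, 4.0, share):.2f} bpw")
```

Under these assumptions, the script reports that about 4.6% of the weights sit at 4.0 bpw (0.08 / 1.75). Because "2.33" is itself rounded, treat this only as an order-of-magnitude figure.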
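The removed "Recommended Evaluation Parameters" section combines three sampling settings:

- a temperature of 1.0 for general evaluations;
- `top_p = 0.95` for code tasks;
- `top_k = 40` for code tasks.

The pure-Python sketch below shows how these knobs typically interact when drawing a single token. It assumes a common ordering: temperature scaling, then top-k, then top-p over the renormalised top-k survivors. Individual inference engines may order or implement these steps differently, and `sample_token` is a hypothetical helper, not a GLM or ExLlama API.

```python
import math
import random


def sample_token(logits, temperature=1.0, top_k=40, top_p=0.95, rng=random):
    """Draw one token index from raw logits (hypothetical helper).

    Assumed order: temperature, then top-k, then top-p (nucleus).
    top_k <= 0 disables the top-k step.
    """
    if temperature <= 0:
        raise ValueError("temperature must be > 0 in this sketch")

    # Temperature: scale the logits, then softmax (subtract the max for stability).
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Top-k: keep the k most probable token indices, most probable first.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    if top_k > 0:
        ranked = ranked[:top_k]

    # Renormalise over the top-k survivors before applying top-p.
    mass = sum(probs[i] for i in ranked)
    renorm = {i: probs[i] / mass for i in ranked}

    # Top-p: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += renorm[i]
        if cumulative >= top_p:
            break

    # random.choices accepts unnormalised weights, so no extra division is needed.
    return rng.choices(kept, weights=[renorm[i] for i in kept], k=1)[0]


if __name__ == "__main__":
    demo_logits = [2.0, 1.5, 0.3, -1.0]  # made-up logits for illustration only
    # Code-task settings from the removed README section.
    print(sample_token(demo_logits, temperature=1.0, top_k=40, top_p=0.95))
```

For the general-evaluation case the README specifies only the temperature. In this sketch, passing `top_k=0` and `top_p=1.0` leaves the other two filters effectively off.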