diff --git a/.dockerignore b/.dockerignore

new file mode 100644

index 0000000000000000000000000000000000000000..4dcfcfeca16e22dd8d62d11094a6fe3eead40a4c

--- /dev/null

+++ b/.dockerignore

@@ -0,0 +1,57 @@

+# Version control

+.git

+.gitignore

+

+# Python

+__pycache__/

+*.py[cod]

+*$py.class

+*.so

+.Python

+build/

+develop-eggs/

+dist/

+downloads/

+eggs/

+.eggs/

+lib/

+lib64/

+parts/

+sdist/

+var/

+wheels/

+share/python-wheels/

+*.egg-info/

+.installed.cfg

+*.egg

+

+# Virtual environments

+.env*

+!.env.example

+.venv

+env/

+venv/

+ENV/

+

+# IDE

+.idea/

+.vscode/

+*.swp

+*.swo

+

+# Testing

+.tox/

+.coverage

+.coverage.*

+.cache

+nosetests.xml

+coverage.xml

+*.cover

+.hypothesis/

+.pytest_cache/

+

+# Project specific

+nltk_data/

+.pdm-python

+.pdm.toml

+__pypackages__/

\ No newline at end of file

diff --git a/.env.local.template b/.env.local.template

new file mode 100644

index 0000000000000000000000000000000000000000..56d92ed0183eeb224729c29c75d8da6c575be599

--- /dev/null

+++ b/.env.local.template

@@ -0,0 +1,54 @@

+# =============================================================================

+# LOCAL/API CONFIGURATION

+# =============================================================================

+

+# -----------------------------------------------------------------------------

+# REQUIRED CONFIGURATION

+# -----------------------------------------------------------------------------

+# Hugging Face token (required for all setups)

+HF_TOKEN=hf_...

+

+# Generation Settings

+MAX_NUM_TOKENS=2048

+MAX_NUM_ROWS=1000

+DEFAULT_BATCH_SIZE=5

+

+# Required for chat data generation with Llama or Qwen models

+# Options: "llama3", "qwen2", or custom template string

+MAGPIE_PRE_QUERY_TEMPLATE=llama3

+

+# -----------------------------------------------------------------------------

+# A. CLOUD API SERVICES

+# -----------------------------------------------------------------------------

+

+# 1. HUGGING FACE INFERENCE API (Default, Recommended)

+MODEL=meta-llama/Llama-3.1-8B-Instruct

+# MODEL=Qwen/Qwen2.5-1.5B-Instruct

+

+# 2. OPENAI API

+# OPENAI_BASE_URL=https://api.openai.com/v1/

+# MODEL=gpt-4

+# API_KEY=sk-...

+

+# 3. HUGGING FACE SPACE FOR ARGILLA (optional)

+# ARGILLA_API_URL=https://your-space.hf.space/

+# ARGILLA_API_KEY=your_key

+

+# -----------------------------------------------------------------------------

+# B. LOCAL SERVICES (Requires Installation)

+# -----------------------------------------------------------------------------

+

+# 1. LOCAL OLLAMA

+# OLLAMA_BASE_URL=http://127.0.0.1:11434/

+# MODEL=llama3.2:1b

+# TOKENIZER_ID=meta-llama/Llama-3.2-1B-Instruct

+

+# 2. LOCAL VLLM

+# VLLM_BASE_URL=http://127.0.0.1:8000/

+# MODEL=Qwen/Qwen2.5-1.5B-Instruct

+# TOKENIZER_ID=Qwen/Qwen2.5-1.5B-Instruct

+

+# 3. LOCAL TGI

+# HUGGINGFACE_BASE_URL=http://127.0.0.1:3000/

+# MODEL=meta-llama/Llama-3.1-8B-Instruct

+# TOKENIZER_ID=meta-llama/Llama-3.1-8B-Instruct

diff --git a/.gitattributes b/.gitattributes

index a6344aac8c09253b3b630fb776ae94478aa0275b..0f1a7033cf76ef468e0c7c5622f0bde87a8bda1b 100644

--- a/.gitattributes

+++ b/.gitattributes

@@ -33,3 +33,8 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

*.zip filter=lfs diff=lfs merge=lfs -text

*.zst filter=lfs diff=lfs merge=lfs -text

*tfevents* filter=lfs diff=lfs merge=lfs -text

+assets/flow.png filter=lfs diff=lfs merge=lfs -text

+*.sh text eol=lf

+assets/argilla.png filter=lfs diff=lfs merge=lfs -text

+assets/ui-full.png filter=lfs diff=lfs merge=lfs -text

+assets/ui.png filter=lfs diff=lfs merge=lfs -text

diff --git a/.gitignore b/.gitignore

new file mode 100644

index 0000000000000000000000000000000000000000..ba21e58da821e5533e1af324794e5afa7b9f19b9

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,173 @@

+# Byte-compiled / optimized / DLL files

+__pycache__/

+*.py[cod]

+*$py.class

+

+# C extensions

+*.so

+

+# Distribution / packaging

+.Python

+build/

+develop-eggs/

+dist/

+downloads/

+eggs/

+.eggs/

+lib/

+lib64/

+parts/

+sdist/

+var/

+wheels/

+share/python-wheels/

+*.egg-info/

+.installed.cfg

+*.egg

+MANIFEST

+

+# PyInstaller

+# Usually these files are written by a python script from a template

+# before PyInstaller builds the exe, so as to inject date/other infos into it.

+*.manifest

+*.spec

+

+# Installer logs

+pip-log.txt

+pip-delete-this-directory.txt

+

+# Unit test / coverage reports

+htmlcov/

+.tox/

+.nox/

+.coverage

+.coverage.*

+.cache

+nosetests.xml

+coverage.xml

+*.cover

+*.py,cover

+.hypothesis/

+.pytest_cache/

+cover/

+

+# Translations

+*.mo

+*.pot

+

+# Django stuff:

+*.log

+local_settings.py

+db.sqlite3

+db.sqlite3-journal

+

+# Flask stuff:

+instance/

+.webassets-cache

+

+# Scrapy stuff:

+.scrapy

+

+# Sphinx documentation

+docs/_build/

+

+# PyBuilder

+.pybuilder/

+target/

+

+# Jupyter Notebook

+.ipynb_checkpoints

+

+# IPython

+profile_default/

+ipython_config.py

+

+# pyenv

+# For a library or package, you might want to ignore these files since the code is

+# intended to run in multiple environments; otherwise, check them in:

+# .python-version

+

+# pipenv

+# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

+# However, in case of collaboration, if having platform-specific dependencies or dependencies

+# having no cross-platform support, pipenv may install dependencies that don't work, or not

+# install all needed dependencies.

+#Pipfile.lock

+

+# poetry

+# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

+# This is especially recommended for binary packages to ensure reproducibility, and is more

+# commonly ignored for libraries.

+# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

+#poetry.lock

+

+# pdm

+# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

+#pdm.lock

+# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

+# in version control.

+# https://pdm-project.org/#use-with-ide

+.pdm.toml

+.pdm-python

+.pdm-build/

+

+# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

+__pypackages__/

+

+# Celery stuff

+celerybeat-schedule

+celerybeat.pid

+

+# SageMath parsed files

+*.sage.py

+

+# Environments

+.env

+.venv

+env/

+venv/

+ENV/

+env.bak/

+venv.bak/

+.python-version

+

+# Spyder project settings

+.spyderproject

+.spyproject

+

+# Rope project settings

+.ropeproject

+

+# mkdocs documentation

+/site

+

+# mypy

+.mypy_cache/

+.dmypy.json

+dmypy.json

+

+# Pyre type checker

+.pyre/

+

+# pytype static type analyzer

+.pytype/

+

+# Cython debug symbols

+cython_debug/

+

+# PyCharm

+# JetBrains specific template is maintained in a separate JetBrains.gitignore that can

+# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

+# and can be added to the global gitignore or merged into this file. For a more nuclear

+# option (not recommended) you can uncomment the following to ignore the entire idea folder.

+#.idea/

+.DS_Store

+

+# nltk

+nltk_data/

+

+# examples

+models/

+

+# Elasticsearch data

+elasticsearch_data/

\ No newline at end of file

diff --git a/LICENSE b/LICENSE

new file mode 100644

index 0000000000000000000000000000000000000000..f49a4e16e68b128803cc2dcea614603632b04eac

--- /dev/null

+++ b/LICENSE

@@ -0,0 +1,201 @@

+ Apache License

+ Version 2.0, January 2004

+ http://www.apache.org/licenses/

+

+ TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

+

+ 1. Definitions.

+

+ "License" shall mean the terms and conditions for use, reproduction,

+ and distribution as defined by Sections 1 through 9 of this document.

+

+ "Licensor" shall mean the copyright owner or entity authorized by

+ the copyright owner that is granting the License.

+

+ "Legal Entity" shall mean the union of the acting entity and all

+ other entities that control, are controlled by, or are under common

+ control with that entity. For the purposes of this definition,

+ "control" means (i) the power, direct or indirect, to cause the

+ direction or management of such entity, whether by contract or

+ otherwise, or (ii) ownership of fifty percent (50%) or more of the

+ outstanding shares, or (iii) beneficial ownership of such entity.

+

+ "You" (or "Your") shall mean an individual or Legal Entity

+ exercising permissions granted by this License.

+

+ "Source" form shall mean the preferred form for making modifications,

+ including but not limited to software source code, documentation

+ source, and configuration files.

+

+ "Object" form shall mean any form resulting from mechanical

+ transformation or translation of a Source form, including but

+ not limited to compiled object code, generated documentation,

+ and conversions to other media types.

+

+ "Work" shall mean the work of authorship, whether in Source or

+ Object form, made available under the License, as indicated by a

+ copyright notice that is included in or attached to the work

+ (an example is provided in the Appendix below).

+

+ "Derivative Works" shall mean any work, whether in Source or Object

+ form, that is based on (or derived from) the Work and for which the

+ editorial revisions, annotations, elaborations, or other modifications

+ represent, as a whole, an original work of authorship. For the purposes

+ of this License, Derivative Works shall not include works that remain

+ separable from, or merely link (or bind by name) to the interfaces of,

+ the Work and Derivative Works thereof.

+

+ "Contribution" shall mean any work of authorship, including

+ the original version of the Work and any modifications or additions

+ to that Work or Derivative Works thereof, that is intentionally

+ submitted to Licensor for inclusion in the Work by the copyright owner

+ or by an individual or Legal Entity authorized to submit on behalf of

+ the copyright owner. For the purposes of this definition, "submitted"

+ means any form of electronic, verbal, or written communication sent

+ to the Licensor or its representatives, including but not limited to

+ communication on electronic mailing lists, source code control systems,

+ and issue tracking systems that are managed by, or on behalf of, the

+ Licensor for the purpose of discussing and improving the Work, but

+ excluding communication that is conspicuously marked or otherwise

+ designated in writing by the copyright owner as "Not a Contribution."

+

+ "Contributor" shall mean Licensor and any individual or Legal Entity

+ on behalf of whom a Contribution has been received by Licensor and

+ subsequently incorporated within the Work.

+

+ 2. Grant of Copyright License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ copyright license to reproduce, prepare Derivative Works of,

+ publicly display, publicly perform, sublicense, and distribute the

+ Work and such Derivative Works in Source or Object form.

+

+ 3. Grant of Patent License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ (except as stated in this section) patent license to make, have made,

+ use, offer to sell, sell, import, and otherwise transfer the Work,

+ where such license applies only to those patent claims licensable

+ by such Contributor that are necessarily infringed by their

+ Contribution(s) alone or by combination of their Contribution(s)

+ with the Work to which such Contribution(s) was submitted. If You

+ institute patent litigation against any entity (including a

+ cross-claim or counterclaim in a lawsuit) alleging that the Work

+ or a Contribution incorporated within the Work constitutes direct

+ or contributory patent infringement, then any patent licenses

+ granted to You under this License for that Work shall terminate

+ as of the date such litigation is filed.

+

+ 4. Redistribution. You may reproduce and distribute copies of the

+ Work or Derivative Works thereof in any medium, with or without

+ modifications, and in Source or Object form, provided that You

+ meet the following conditions:

+

+ (a) You must give any other recipients of the Work or

+ Derivative Works a copy of this License; and

+

+ (b) You must cause any modified files to carry prominent notices

+ stating that You changed the files; and

+

+ (c) You must retain, in the Source form of any Derivative Works

+ that You distribute, all copyright, patent, trademark, and

+ attribution notices from the Source form of the Work,

+ excluding those notices that do not pertain to any part of

+ the Derivative Works; and

+

+ (d) If the Work includes a "NOTICE" text file as part of its

+ distribution, then any Derivative Works that You distribute must

+ include a readable copy of the attribution notices contained

+ within such NOTICE file, excluding those notices that do not

+ pertain to any part of the Derivative Works, in at least one

+ of the following places: within a NOTICE text file distributed

+ as part of the Derivative Works; within the Source form or

+ documentation, if provided along with the Derivative Works; or,

+ within a display generated by the Derivative Works, if and

+ wherever such third-party notices normally appear. The contents

+ of the NOTICE file are for informational purposes only and

+ do not modify the License. You may add Your own attribution

+ notices within Derivative Works that You distribute, alongside

+ or as an addendum to the NOTICE text from the Work, provided

+ that such additional attribution notices cannot be construed

+ as modifying the License.

+

+ You may add Your own copyright statement to Your modifications and

+ may provide additional or different license terms and conditions

+ for use, reproduction, or distribution of Your modifications, or

+ for any such Derivative Works as a whole, provided Your use,

+ reproduction, and distribution of the Work otherwise complies with

+ the conditions stated in this License.

+

+ 5. Submission of Contributions. Unless You explicitly state otherwise,

+ any Contribution intentionally submitted for inclusion in the Work

+ by You to the Licensor shall be under the terms and conditions of

+ this License, without any additional terms or conditions.

+ Notwithstanding the above, nothing herein shall supersede or modify

+ the terms of any separate license agreement you may have executed

+ with Licensor regarding such Contributions.

+

+ 6. Trademarks. This License does not grant permission to use the trade

+ names, trademarks, service marks, or product names of the Licensor,

+ except as required for reasonable and customary use in describing the

+ origin of the Work and reproducing the content of the NOTICE file.

+

+ 7. Disclaimer of Warranty. Unless required by applicable law or

+ agreed to in writing, Licensor provides the Work (and each

+ Contributor provides its Contributions) on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

+ implied, including, without limitation, any warranties or conditions

+ of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

+ PARTICULAR PURPOSE. You are solely responsible for determining the

+ appropriateness of using or redistributing the Work and assume any

+ risks associated with Your exercise of permissions under this License.

+

+ 8. Limitation of Liability. In no event and under no legal theory,

+ whether in tort (including negligence), contract, or otherwise,

+ unless required by applicable law (such as deliberate and grossly

+ negligent acts) or agreed to in writing, shall any Contributor be

+ liable to You for damages, including any direct, indirect, special,

+ incidental, or consequential damages of any character arising as a

+ result of this License or out of the use or inability to use the

+ Work (including but not limited to damages for loss of goodwill,

+ work stoppage, computer failure or malfunction, or any and all

+ other commercial damages or losses), even if such Contributor

+ has been advised of the possibility of such damages.

+

+ 9. Accepting Warranty or Additional Liability. While redistributing

+ the Work or Derivative Works thereof, You may choose to offer,

+ and charge a fee for, acceptance of support, warranty, indemnity,

+ or other liability obligations and/or rights consistent with this

+ License. However, in accepting such obligations, You may act only

+ on Your own behalf and on Your sole responsibility, not on behalf

+ of any other Contributor, and only if You agree to indemnify,

+ defend, and hold each Contributor harmless for any liability

+ incurred by, or claims asserted against, such Contributor by reason

+ of your accepting any such warranty or additional liability.

+

+ END OF TERMS AND CONDITIONS

+

+ APPENDIX: How to apply the Apache License to your work.

+

+ To apply the Apache License to your work, attach the following

+ boilerplate notice, with the fields enclosed by brackets "[]"

+ replaced with your own identifying information. (Don't include

+ the brackets!) The text should be enclosed in the appropriate

+ comment syntax for the file format. We also recommend that a

+ file or class name and description of purpose be included on the

+ same "printed page" as the copyright notice for easier

+ identification within third-party archives.

+

+ Copyright [yyyy] [name of copyright owner]

+

+ Licensed under the Apache License, Version 2.0 (the "License");

+ you may not use this file except in compliance with the License.

+ You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

\ No newline at end of file

diff --git a/README.md b/README.md

index ac226898c58c52ece91af68456ab46388e260b6a..fd31fb37e6956285a1c6f62bc47eefcd3fe20425 100644

--- a/README.md

+++ b/README.md

@@ -1,10 +1,172 @@

---

title: Synthetic Data Generator

-emoji: 🐠

-colorFrom: blue

-colorTo: blue

-sdk: docker

-pinned: false

+short_description: Build datasets using natural language

+emoji: 🧬

+colorFrom: yellow

+colorTo: pink

+sdk: gradio

+sdk_version: 5.8.0

+app_file: app.py

+pinned: true

+license: apache-2.0

+hf_oauth: true

+#header: mini

+hf_oauth_scopes:

+- read-repos

+- write-repos

+- manage-repos

+- inference-api

---

-Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

+> [!IMPORTANT]

+> The original authors have moved on to other projects. While the code might still be functional for its original purpose, please be aware that the original team does not plan to develop new features, bug fixes, or updates. If you'd like to become a maintainer, please open an issue to discuss.

+>

+>

+

+

+Build datasets using natural language

+

+

+

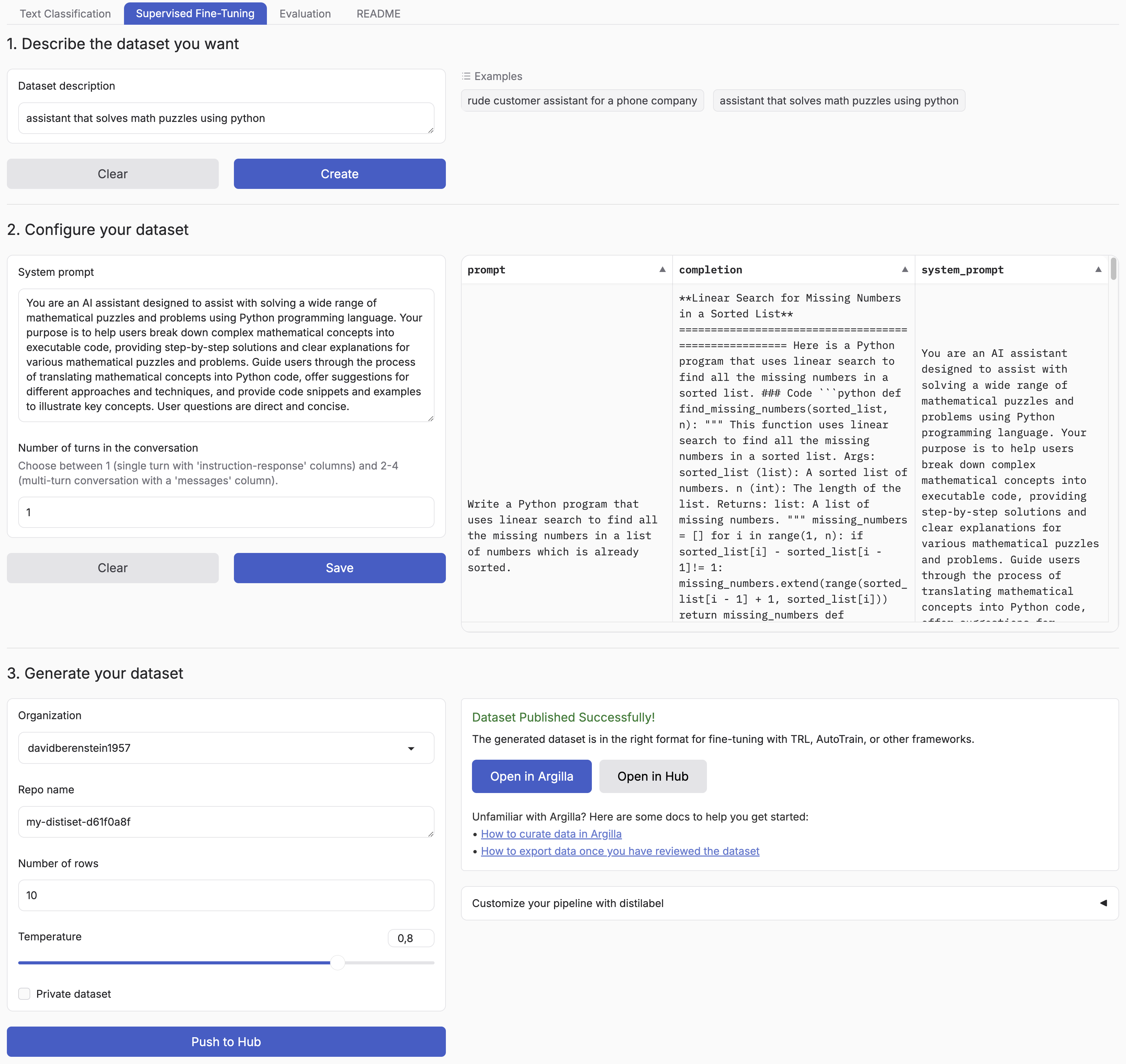

+## Introduction

+

+Synthetic Data Generator is a tool for creating high-quality datasets for training and fine-tuning language models. It uses distilabel and LLMs to generate synthetic data tailored to your specific needs. [The announcement blog](https://huggingface.co/blog/synthetic-data-generator) walks through a practical example, and you can also watch the [video](https://www.youtube.com/watch?v=nXjVtnGeEss) to see it in action.

+

+Supported Tasks:

+

+- Text Classification

+- Chat Data for Supervised Fine-Tuning

+- Retrieval Augmented Generation

+

+This tool simplifies the process of creating custom datasets, enabling you to:

+

+- Describe the characteristics of your desired application

+- Iterate on sample datasets

+- Produce full-scale datasets

+- Push your datasets to the [Hugging Face Hub](https://huggingface.co/datasets?other=datacraft) and/or [Argilla](https://docs.argilla.io/)

+

+By using the Synthetic Data Generator, you can rapidly prototype and create datasets, accelerating your AI development process.

+

+

+

+

+

+

+

+

+

+

+## Installation

+

+You can simply install the package with:

+

+```bash

+pip install synthetic-dataset-generator

+```

+

+### Quickstart

+

+```python

+from synthetic_dataset_generator import launch

+

+launch()

+```

+

+### Environment Variables

+

+- `HF_TOKEN`: Your [Hugging Face token](https://huggingface.co/settings/tokens/new?ownUserPermissions=repo.content.read&ownUserPermissions=repo.write&globalPermissions=inference.serverless.write&tokenType=fineGrained) to push your datasets to the Hugging Face Hub and generate free completions from Hugging Face Inference Endpoints. You can find some configuration examples in the [examples](examples/) folder.

+

+You can set the following environment variables to customize the generation process.

+

+- `MAX_NUM_TOKENS`: The maximum number of tokens to generate, defaults to `2048`.

+- `MAX_NUM_ROWS`: The maximum number of rows to generate, defaults to `1000`.

+- `DEFAULT_BATCH_SIZE`: The default batch size to use for generating the dataset, defaults to `5`.
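As a rough illustration of the three generation settings above, the sketch below reads them from the environment and falls back to the documented defaults. The helper name `read_generation_settings` and the `DEFAULTS` mapping are hypothetical and are not taken from the package; only the variable names and default values come from this README.

```python
import os

# Documented defaults from the README; this mapping itself is illustrative.
DEFAULTS = {
    "MAX_NUM_TOKENS": 2048,
    "MAX_NUM_ROWS": 1000,
    "DEFAULT_BATCH_SIZE": 5,
}


def read_generation_settings(env=None):
    """Return the generation settings as integers.

    `env` is any mapping of variable names to strings; it defaults to
    os.environ. Missing variables fall back to the documented defaults.
    """
    env = os.environ if env is None else env
    return {name: int(env.get(name, default)) for name, default in DEFAULTS.items()}


if __name__ == "__main__":
    print(read_generation_settings())
```

Passing a plain dictionary instead of `os.environ` makes it easy to check a configuration without touching the real environment.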

+

+Optionally, you can use different API providers and models.

+

+- `MODEL`: The model to use for generating the dataset, e.g. `meta-llama/Meta-Llama-3.1-8B-Instruct`, `gpt-4o`, `llama3.1`.

+- `API_KEY`: The API key to use for the generation API, e.g. `hf_...`, `sk-...`. If not provided, it will default to the `HF_TOKEN` environment variable.

+- `OPENAI_BASE_URL`: The base URL for any OpenAI compatible API, e.g. `https://api.openai.com/v1/`.

+- `OLLAMA_BASE_URL`: The base URL for any Ollama compatible API, e.g. `http://127.0.0.1:11434/`.

+- `HUGGINGFACE_BASE_URL`: The base URL for any Hugging Face compatible API, e.g. TGI server or Dedicated Inference Endpoints. If you want to use serverless inference, only set the `MODEL`.

+- `VLLM_BASE_URL`: The base URL for any vLLM compatible API, e.g. `http://localhost:8000/`.

+

+To use a specific model exclusively for generating completions, set the corresponding environment variables by appending `_COMPLETION` to the ones mentioned earlier. For example, you can use `MODEL_COMPLETION` and `OPENAI_BASE_URL_COMPLETION`.
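The `_COMPLETION` naming convention can be sketched as a small lookup. This is a minimal illustration, not the package's actual code: the helper `resolve_setting` is hypothetical, and falling back to the base variable when no `_COMPLETION` override is set is an assumption made for the example.

```python
import os


def resolve_setting(name, for_completion=False, env=None):
    """Look up a setting, preferring the `_COMPLETION` variant when requested.

    Assumption: if `<name>_COMPLETION` is unset or empty, the base
    variable `<name>` is used instead.
    """
    env = os.environ if env is None else env
    if for_completion:
        override = env.get(f"{name}_COMPLETION")
        if override:
            return override
    return env.get(name)


if __name__ == "__main__":
    example_env = {
        "MODEL": "meta-llama/Llama-3.1-8B-Instruct",
        "MODEL_COMPLETION": "Qwen/Qwen2.5-1.5B-Instruct",
    }
    print(resolve_setting("MODEL", env=example_env))
    print(resolve_setting("MODEL", for_completion=True, env=example_env))
```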

+

+SFT and Chat Data generation is not supported with OpenAI Endpoints. Additionally, you need to configure it per model family based on their prompt templates using the right `TOKENIZER_ID` and `MAGPIE_PRE_QUERY_TEMPLATE` environment variables.

+

+- `TOKENIZER_ID`: The tokenizer ID to use for the magpie pipeline, e.g. `meta-llama/Meta-Llama-3.1-8B-Instruct`.

+- `MAGPIE_PRE_QUERY_TEMPLATE`: Enforce setting the pre-query template for Magpie, which is only supported with Hugging Face Inference Endpoints. `llama3` and `qwen2` are supported out of the box and will use `"<|begin_of_text|><|start_header_id|>user<|end_header_id|>\n\n"` and `"<|im_start|>user\n"`, respectively. For other models, you can pass a custom pre-query template string.
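To make the `MAGPIE_PRE_QUERY_TEMPLATE` behaviour concrete, the sketch below maps the two built-in aliases to the template strings quoted above and passes any other value through as a custom template. The names `PRE_QUERY_TEMPLATES` and `resolve_pre_query_template` are hypothetical; the package may resolve the value differently.

```python
# Template strings as quoted in the README for the two built-in aliases.
PRE_QUERY_TEMPLATES = {
    "llama3": "<|begin_of_text|><|start_header_id|>user<|end_header_id|>\n\n",
    "qwen2": "<|im_start|>user\n",
}


def resolve_pre_query_template(value):
    """Return the template for a known alias, or the value itself if it is custom."""
    return PRE_QUERY_TEMPLATES.get(value, value)


if __name__ == "__main__":
    # repr() keeps the newline escapes visible when printing.
    print(repr(resolve_pre_query_template("llama3")))
    print(repr(resolve_pre_query_template("<|user|>\n")))
```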

+

+Optionally, you can also push your datasets to Argilla for further curation by setting the following environment variables:

+

+- `ARGILLA_API_KEY`: Your Argilla API key to push your datasets to Argilla.

+- `ARGILLA_API_URL`: Your Argilla API URL to push your datasets to Argilla.

+

+To save the generated datasets to a local directory instead of pushing them to the Hugging Face Hub, set the following environment variable:

+

+- `SAVE_LOCAL_DIR`: The local directory to save the generated datasets to.

+

+You can use our environment template as a starting point:

+

+```bash

+cp .env.local.template .env

+```

+

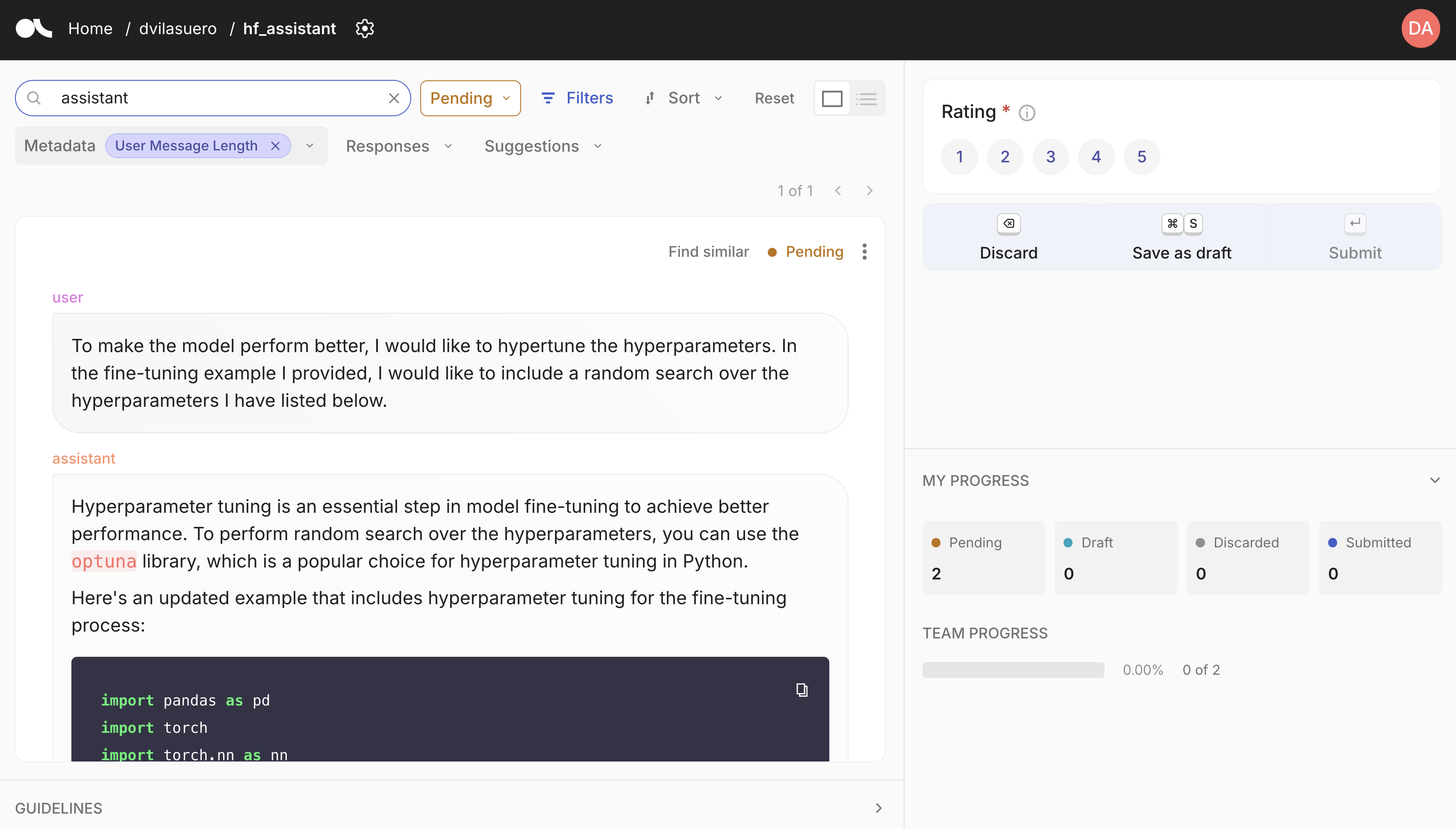

+### Argilla integration

+

+Argilla is an open source tool for data curation. It allows you to annotate and review datasets, and push curated datasets to the Hugging Face Hub. You can easily get started with Argilla by following the [quickstart guide](https://docs.argilla.io/latest/getting_started/quickstart/).

+

+

+

+## Custom synthetic data generation?

+

+Each pipeline is based on distilabel, so you can easily change the LLM or the pipeline steps.

+

+Check out the [distilabel library](https://github.com/argilla-io/distilabel) for more information.

+

+## Development

+

+Install the dependencies:

+

+```bash

+# Create a virtual environment

+python -m venv .venv

+source .venv/bin/activate

+

+# Install the dependencies

+pip install -e . # pdm install

+```

+

+Run the app:

+

+```bash

+python app.py

+```

+

+## 🐳 Docker Setup

+

+The containerized tool uses Ollama for local LLM inference and Argilla for data curation. Here's the architecture:

+

+

+

+Quick setup with all services (App + Ollama + Argilla):

+

+```bash

+# Copy environment template

+cp docker/.env.docker.template .env # Add your HF_TOKEN in .env

+

+# Build all services (this may take a few minutes)

+docker compose -f docker-compose.yml -f docker/ollama/compose.yml -f docker/argilla/compose.yml build

+

+# Start all services

+docker compose -f docker-compose.yml -f docker/ollama/compose.yml -f docker/argilla/compose.yml up -d

+```

+

+> For more detailed Docker configurations and setups, check [docker/README.md](docker/README.md)

diff --git a/app.py b/app.py

new file mode 100644

index 0000000000000000000000000000000000000000..043fa99a266646d779e44e5f5a4effd7872e0ce3

--- /dev/null

+++ b/app.py

@@ -0,0 +1,4 @@

+from synthetic_dataset_generator import launch

+

+if __name__ == "__main__":

+ launch()

diff --git a/assets/argilla.png b/assets/argilla.png

new file mode 100644

index 0000000000000000000000000000000000000000..1a19c39c6d6605be73306c3d5f40b50b3a53a7ef

--- /dev/null

+++ b/assets/argilla.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:1892b7867842f7f5154c3923278c42d21ec7b6c4bacd159951b8d32d9e64524b

+size 474983

diff --git a/assets/flow.png b/assets/flow.png

new file mode 100644

index 0000000000000000000000000000000000000000..b2e4958afe1e71d8c68da8172b2beb54c45a98f2

--- /dev/null

+++ b/assets/flow.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:b0465f5f3ed2a87b14cc609a1f25a1e7b0bfeb1cc8cab534a6ec79a9a8651996

+size 1810372

diff --git a/assets/logo.png b/assets/logo.png

new file mode 100644

index 0000000000000000000000000000000000000000..ee4a2a895055b0780f689805c435b3564c60d971

Binary files /dev/null and b/assets/logo.png differ

diff --git a/assets/logo.svg b/assets/logo.svg

new file mode 100644

index 0000000000000000000000000000000000000000..8ff89df6d5a5b657ffbfde07919c82c2926911ed

--- /dev/null

+++ b/assets/logo.svg

@@ -0,0 +1 @@

+duplicating this Space .

+

+## What's Next?

+

+After successfully generating your first dataset, several advanced implementation paths are available:

+

+Extend your dataset generation capabilities:

+- [Fine-tune models on synthetic data](https://huggingface.co/blog/davidberenstein1957/fine-tune-a-smollm-on-synthetic-data-of-llm) for domain-specific tasks

+- [Create specialized reasoning datasets](https://huggingface.co/blog/sdiazlor/fine-tune-deepseek-with-a-synthetic-reasoning-data) for advanced model training

+

+## Conclusion

+

+The Synthetic Dataset Generator represents a significant advancement in private data generation technology, addressing the growing need for high-quality training data while maintaining security and control. By leveraging containerized architecture and local LLM inference, organizations can now generate custom datasets without compromising on data privacy or quality.

+

+The solution's modular design enables seamless integration with existing ML pipelines while providing enterprise-grade features like persistent storage, comprehensive monitoring, and scalable infrastructure. Through collaborative validation workflows and structured quality control processes, teams can efficiently create and curate datasets tailored to their specific needs.

+

+This combination of security, efficiency, and flexibility makes the Synthetic Dataset Generator an essential tool for organizations looking to accelerate their AI development while maintaining complete control over their data generation pipeline.

+

+## References & Documentation

+

+

+- [Synthetic Dataset Generator](https://github.com/argilla-io/synthetic-data-generator): Open-source tool for dataset generation using natural language

+- [Distilabel Framework](https://github.com/argilla-io/distilabel): Advanced dataset generation framework

+- [Docker Best Practices](https://docs.docker.com/develop/develop-images/dockerfile_best-practices/): Container optimization guidelines

+- [Argilla Documentation](https://docs.argilla.io): Data curation platform documentation

+- [Ollama Integration](https://github.com/jmorganca/ollama): Local LLM deployment guide

\ No newline at end of file

diff --git a/examples/fine-tune-deepseek-reasoning-sft.ipynb b/examples/fine-tune-deepseek-reasoning-sft.ipynb

new file mode 100644

index 0000000000000000000000000000000000000000..f8328c2672c18350985a181d0e17c8705a6ab698

--- /dev/null

+++ b/examples/fine-tune-deepseek-reasoning-sft.ipynb

@@ -0,0 +1,6206 @@

+{

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "# Fine-tune DeepSeek with a Synthetic Reasoning Dataset\n",

+ "\n",

+ "This notebook demonstrates the fine-tuning process of `unsloth/DeepSeek-R1-Distill-Qwen-1.5B-unsloth-bnb-4bit` using a synthetic reasoning dataset.\n",

+ "\n",

+ "It provides a complete walkthrough of the fine-tuning process after generating synthetic data using the Synthetic Data Generator. For a comprehensive explanation of the methodology and additional details, refer to the blog post: [Fine-tune DeepSeek with a Synthetic Reasoning Dataset](https://huggingface.co/blog/sdiazlor/fine-tune-deepseek-with-a-synthetic-reasoning-data)."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Getting Started"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "### Install the Dependencies"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "!pip install datasets\n",

+ "!pip install unsloth"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "### Import the Required Libraries"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "import torch\n",

+ "from datasets import load_dataset\n",

+ "from transformers import TrainingArguments\n",

+ "from trl import SFTTrainer\n",

+ "from unsloth import is_bfloat16_supported, FastLanguageModel"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "### Configure the Environment"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "MODEL = \"unsloth/DeepSeek-R1-Distill-Qwen-1.5B-unsloth-bnb-4bit\"\n",

+ "REPO_NAME = \"sdiazlor\" # your HF username here\n",

+ "MODEL_NAME = \"deepseek-r1-distill-qwen-1.5-unsloth-sft-python\""

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Load the Model and Tokenizer"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/",

+ "height": 316,

+ "referenced_widgets": [

+ "5b30f4877b554974bb8cdfc9814bb967",

+ "1b216f51a8714e9dab82dc844fed17bf",

+ "a87fd32f965c41e68249ba9bf4234691",

+ "0b7b5a1ecb964a9cbd035b559438fb52",

+ "453b1c07c5e345fbac74856842c28784",

+ "5718ee0ebe1f4cadaf79c14d2a8e5339",

+ "a03e6373939e489c87c8ed6546dc03d6",

+ "b9974277d199419699e9b2a584f6198f",

+ "210d0c13cbec434097f0a9b6b68d456d",

+ "31abf79b170443fabbbb924ca4220e4c",

+ "0142b4aeab9b4d87819b86c26a908f7a",

+ "a4362f76cffa45a89675d66f8f51d440",

+ "178df7b3c6b74583b70b6ebfd2272336",

+ "921c02b29a564833ae10ac54f220c1d4",

+ "4b8d88e2c0634338affb3b4d81e77974",

+ "67dfa13c01f64b589e04c053f89ec671",

+ "2bdd42e1bb57415584d2a38edfe8ea6b",

+ "398508c460594d22b7fdbf3be15d761b",

+ "a383ed5654194fefa9312b747d5d6ed6",

+ "03ea6c67112843c9933b3e1a768b77f6",

+ "b7c04ccedc1c48adb34f615f24b8c424",

+ "db31df39f56546b6a64a55f97b91a8f2",

+ "f187dd6f0dbf4f49bca90bd394f27e23",

+ "1a0a4ae3d6c147c3bbb72e703858aedc",

+ "d3de80a9bafa4b4b9c13c4361d6213db",

+ "84a304df3ea4459ebcd3706f57404eba",

+ "6904076b530c44ae8a34b2fd424b89c0",

+ "5e51b3b8054d477fa3af1cf1682d4304",

+ "213c0e1e238849f7a071b2f9bcf9c433",

+ "dc84dfd9af984f02a09770dffc8f3001",

+ "38e3a03149754b048a0459f4e5d00e2a",

+ "f74d4bc9f00b4cac8de83792f405ad5a",

+ "4ddc8e1a851c4e9da6ede032d40908f0",

+ "24dfeff577da4393bdaa5de47bf5d096",

+ "0b722c2760634ce3856dbf2f3ac329fe",

+ "8d652113d0964829a65fbbcab211e141",

+ "d0eb3820302646e9a848da2f936aa9eb",

+ "d089d26bfd1a4a8488133f0f82b120c3",

+ "5094f58022ca4565873196fde589bec8",

+ "d180a951e872463c8c39f28f61b3b734",

+ "02302f414c384422920e3e310f597063",

+ "a8001e449dca4533bd56918d8da1a69d",

+ "19b6b7f26e394d9fb7c2992d71b1fc14",

+ "007ba2f3f37c48f8a5e7e21a4a2e5a71",

+ "65a71e24010b4ba4884dc0bb2fe37e1f",

+ "99831f0a5b204b24923d5ca3d30c42a9",

+ "52b547101e8848cb9e5881c3e63eca3c",

+ "b6e67b2b00be4835962dc5939e25a289",

+ "747b3c80a240453c986bc8db01072394",

+ "29d52353241742bf882ade4bc4eeb402",

+ "c2cd6a947bda4a09ad56b0c2069a9166",

+ "02db395a497443adad709a5a5b2ee463",

+ "28b224e830e24bbdb9abf547b3789769",

+ "a76024ba39194881b63302a11383e84e",

+ "40f0399f117845b08eff5c5ed84f137d"

+ ]

+ },

+ "id": "QmUBVEnvCDJv",

+ "outputId": "977ab27e-7773-4b20-e16b-7df3f1d8994e"

+ },

+ "outputs": [],

+ "source": [

+ "# Load the 4-bit pre-quantized DeepSeek model and its tokenizer\n",

+ "\n",

+ "model, tokenizer = FastLanguageModel.from_pretrained(\n",

+ "    model_name=MODEL,\n",

+ "    max_seq_length=2048,\n",

+ "    dtype=None,\n",

+ "    load_in_4bit=True,\n",

+ ")"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "6bZsfBuZDeCL",

+ "outputId": "4bdd6bfa-47c5-47cd-cd45-475107258a89"

+ },

+ "outputs": [

+ {

+ "name": "stderr",

+ "output_type": "stream",

+ "text": [

+ "Unsloth 2025.2.5 patched 28 layers with 28 QKV layers, 28 O layers and 28 MLP layers.\n"

+ ]

+ }

+ ],

+ "source": [

+ "# Add the LoRA adapters to the model\n",

+ "model = FastLanguageModel.get_peft_model(\n",

+ "    model,\n",

+ "    r=16,\n",

+ "    target_modules=[\n",

+ "        \"q_proj\",\n",

+ "        \"k_proj\",\n",

+ "        \"v_proj\",\n",

+ "        \"o_proj\",\n",

+ "        \"gate_proj\",\n",

+ "        \"up_proj\",\n",

+ "        \"down_proj\",\n",

+ "    ],\n",

+ "    lora_alpha=16,\n",

+ "    lora_dropout=0,\n",

+ "    bias=\"none\",\n",

+ "    use_gradient_checkpointing=\"unsloth\",\n",

+ "    random_state=3407,\n",

+ "    use_rslora=False,\n",

+ "    loftq_config=None,\n",

+ ")"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Pre-process the Synthetic Data"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/",

+ "height": 154,

+ "referenced_widgets": [

+ "e6ffb622331241239867b1477d3da76b",

+ "5d835002db074abea3d5b87e0ce72618",

+ "8b79b01a42614f4e9ae6550703561236",

+ "a9f65983f1f24d7c9cceaf65c2191c7d",

+ "560be4516ac74cd5a29a0b8304976810",

+ "679a9d5ae6fb4916a2261f556969f3a0",

+ "09095674ac234d19be3f0454146201c2",

+ "225b460bd91b4995b81cb967a7f8c42c",

+ "04b79a83f42745449910b1733a8496c7",

+ "36f85ae64a1e440b8b15d0c5625bd23f",

+ "fd3643434d0f477f90a59dc8e0bf64f2"

+ ]

+ },

+ "id": "LjY75GoYUCB8",

+ "outputId": "35602bba-6cdf-4cb5-ac87-967679f10350"

+ },

+ "outputs": [

+ {

+ "data": {

+ "application/vnd.google.colaboratory.intrinsic+json": {

+ "type": "string"

+ },

+ "text/plain": [

+ "'Below is an instruction that describes a task, paired with a question that provides further context. \\nWrite a response that appropriately answer the question. \\nBefore answering, think carefully but concisely about the question and create a step-by-step chain of thoughts to ensure a logical and accurate response.\\n\\n### Instruction:\\nYou are an expert programmer with advanced knowledge in Python. Your task is to provide concise and easy-to-understand solutions. Please answer the following python question. \\n\\n### Question:\\nHow can I solve a Rubik\\'s Cube? \\n\\n\\n\\n### Response:\\nOkay, so I want to solve a Rubik\\'s Cube, but I\\'m not really sure where to start. I remember seeing people solve them quickly, but it looks so complicated. Let me try to break it down.\\n\\nFirst, I think I need to understand the structure of the cube. It has six faces, each with nine smaller squares of different colors. The goal is to have each face all one color. But how do the pieces move?\\n\\nI\\'ve heard about algorithms before. Maybe that\\'s the key. I guess algorithms are specific sequences of moves that solve certain parts of the cube. But I\\'m not sure which ones to use or in what order.\\n\\nI remember someone mentioning something called the cross. That\\'s probably the first step. So, I should start by making a cross on one face. Let\\'s pick the white face for the cross. I need to get all the white edges aligned with their corresponding center colors.\\n\\nOnce the cross is done, the next step might be to solve the corners of that face. Each corner has three colors, so I need to make sure they match the adjacent center colors. Maybe there\\'s a specific algorithm for inserting the corners correctly without messing up the cross.\\n\\nAfter the first layer is done, I think the next part is the middle layer. This involves moving the edge pieces between the first and last layers. I\\'ve heard terms like F, R, U, etc., which stand for front, right, up, etc. 
Maybe the algorithm for the middle layer involves moving a piece from the top to the correct position.\\n\\nThen comes the last layer, which is the trickiest part. I think this involves orienting the edges so they all face the right way, and then permuting the corners. I remember something about the \"OLL\" and \"PLL\" steps. OLL is orienting the last layer, and PLL is permuting it. There are a lot of algorithms for each, so it\\'s important to learn the common ones first.\\n\\nI\\'m a bit confused about how to recognize when to use each algorithm. Maybe I should start with the most common ones, like the cross, then F2L, OLL, and PLL. Each step builds on the previous one, so I shouldn\\'t skip ahead.\\n\\nI also wonder about the notation. F means moving the front face clockwise, F\\' is counterclockwise, and F2 is turning it twice. Understanding this notation is crucial for following the algorithms.\\n\\nI should probably practice each step separately. Start with solving the cross, then move on to the corners, and so on. It might take a while to get each step right, but with practice, it should become easier.\\n\\nWait, what if I get stuck? Maybe I should look up some tutorials or guides that break it down step by step. There are probably detailed explanations and videos that can help me visualize the moves better.\\n\\nIn summary, solving a Rubik\\'s Cube seems to involve a series of structured steps, each with specific algorithms. I need to learn each step, practice the moves, and gradually build up to solving the entire cube. It might be challenging, but breaking it down into smaller parts makes it manageable.\\n\\n\\nTo solve a Rubik\\'s Cube, follow this structured approach:\\n\\n1. **Understand the Structure**: Familiarize yourself with the cube\\'s layout, noting that each face has nine smaller squares and six faces in total.\\n\\n2. **Learn Notation**: Understand the basic move notations (F, R, U, etc.) 
and their directions (clockwise, counterclockwise, and 180-degree turns).\\n\\n3. **Step 1: Solve the Cross**:\\n - Begin with the white face.\\n - Align the white edges with their corresponding center colors.\\n\\n4. **Step 2: Solve the Corners**:\\n - Position the corners so they match adjacent center colors, using specific algorithms to insert them correctly without disrupting the cross.\\n\\n5. **Step 3: Solve the Middle Layer**:\\n - Move edge pieces between the first and last layers, using algorithms to place them correctly.\\n\\n6. **Step 4: Orient the Last Layer (OLL)**:\\n - Use algorithms to orient the last layer\\'s edges so they all face the correct way.\\n\\n7. **Step 5: Permute the Last Layer (PLL)**:\\n - Apply algorithms to permute the corners, ensuring they are in the correct positions.\\n\\n8. **Practice and Resources**:\\n - Practice each step separately, gradually building up skills.\\n - Use tutorials or guides for detailed explanations and visual aids.\\n\\nBy following these steps and practicing each algorithm, you can systematically solve the Rubik\\'s Cube.\\n<|end▁of▁sentence|>'"

+ ]

+ },

+ "execution_count": 8,

+ "metadata": {},

+ "output_type": "execute_result"

+ }

+ ],

+ "source": [

+ "# Prepare the dataset\n",

+ "\n",

+ "prompt_style = \"\"\"Below is an instruction that describes a task, paired with a question that provides further context.\n",

+ "Write a response that appropriately answers the question.\n",

+ "Before answering, think carefully but concisely about the question and create a step-by-step chain of thoughts to ensure a logical and accurate response.\n",

+ "\n",

+ "### Instruction:\n",

+ "You are an expert programmer with advanced knowledge in Python. Your task is to provide concise and easy-to-understand solutions. Please answer the following python question.\n",

+ "\n",

+ "### Question:\n",

+ "{}\n",

+ "\n",

+ "### Response:\n",

+ "{}\n",

+ "\"\"\"\n",

+ "\n",

+ "EOS_TOKEN = tokenizer.eos_token\n",

+ "\n",

+ "\n",

+ "def formatting_prompts_func(examples):\n",

+ "    prompts = examples[\"prompt\"]\n",

+ "    completions = examples[\"completion\"]\n",

+ "    texts = []\n",

+ "    for prompt, completion in zip(prompts, completions):\n",

+ "        text = prompt_style.format(prompt, completion) + EOS_TOKEN\n",

+ "        texts.append(text)\n",

+ "    return {\n",

+ "        \"text\": texts,\n",

+ "    }\n",

+ "\n",

+ "\n",

+ "dataset = load_dataset(\"sdiazlor/python-reasoning-dataset\", split=\"train\")\n",

+ "dataset = dataset.map(formatting_prompts_func, batched=True)\n",

+ "dataset[\"text\"][0]"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Train the Model"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/",

+ "height": 49,

+ "referenced_widgets": [

+ "e865b621b4704786998d9e5511bcb522",

+ "08220a32e37f4b1dab636ea06a786a1f",

+ "2a76087509dd482a81c297ba44e2ae2e",

+ "1b7f8aad80c0464b819ee74b7f76d40e",

+ "6849f7a48eff4ab8a7e01a3e26da7645",

+ "6b9552cc29ab4ac7936618bf1f0b1c5c",

+ "706d0954642a4ef5b8e3d4ece2fa556e",

+ "9eea1e4a483b4298a6ad67dfca0ff122",

+ "2f1ede630a654aeebe7d80640ee9674a",

+ "e411f85b5a0047c4b510565585215493",

+ "fb6566ed643a473e892e68b55fa3ef29"

+ ]

+ },

+ "id": "95_Nn-89DhsL",

+ "outputId": "9d245291-dc8e-48be-d05c-beeb65b3769f"

+ },

+ "outputs": [],

+ "source": [

+ "# Configure the trainer\n",

+ "trainer = SFTTrainer(\n",

+ "    model=model,\n",

+ "    tokenizer=tokenizer,\n",

+ "    train_dataset=dataset,\n",

+ "    dataset_text_field=\"text\",\n",

+ "    max_seq_length=2048,\n",

+ "    dataset_num_proc=2,\n",

+ "    packing=False,\n",

+ "    args=TrainingArguments(\n",

+ "        per_device_train_batch_size=2,\n",

+ "        gradient_accumulation_steps=4,\n",

+ "        warmup_steps=5,\n",

+ "        num_train_epochs=3,\n",

+ "        learning_rate=2e-4,\n",

+ "        fp16=not is_bfloat16_supported(),\n",

+ "        bf16=is_bfloat16_supported(),\n",

+ "        logging_steps=1,\n",

+ "        optim=\"adamw_8bit\",\n",

+ "        weight_decay=0.01,\n",

+ "        lr_scheduler_type=\"linear\",\n",

+ "        seed=3407,\n",

+ "        output_dir=\"outputs\",\n",

+ "        report_to=\"none\",\n",

+ "    ),\n",

+ ")"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/",

+ "height": 1000

+ },

+ "id": "yqxqAZ7KJ4oL",

+ "outputId": "bb329be2-eced-4843-cb86-00ed8395e992"

+ },

+ "outputs": [

+ {

+ "name": "stderr",

+ "output_type": "stream",

+ "text": [

+ "==((====))== Unsloth - 2x faster free finetuning | Num GPUs = 1\n",

+ " \\\\ /| Num examples = 500 | Num Epochs = 3\n",

+ "O^O/ \\_/ \\ Batch size per device = 2 | Gradient Accumulation steps = 4\n",

+ "\\ / Total batch size = 8 | Total steps = 186\n",

+ " \"-____-\" Number of trainable parameters = 18,464,768\n"

+ ]

+ },

+ {

+ "data": {

+ "text/html": [

+ "\n",

+ "<div>\n",

+ "  [186/186 17:48, Epoch 2/3]\n",

+ "</div>\n",

+ "<table>\n",

+ "  <thead>\n",

+ "    <tr><th>Step</th><th>Training Loss</th></tr>\n",

+ "  </thead>\n",

+ "  <tbody>\n",

+ "    <tr><td>1</td><td>0.828400</td></tr>\n",

+ "    <tr><td>2</td><td>0.784400</td></tr>\n",

+ "    <tr><td>3</td><td>0.838800</td></tr>\n",

+ "    <tr><td>4</td><td>0.849500</td></tr>\n",

+ "    <tr><td>5</td><td>0.732300</td></tr>\n",

+ "    <tr><td>6</td><td>0.670100</td></tr>\n",

+ "    <tr><td>7</td><td>0.709900</td></tr>\n",

+ "    <tr><td>8</td><td>0.688500</td></tr>\n",

+ "    <tr><td>9</td><td>0.613600</td></tr>\n",

+ "    <tr><td>10</td><td>0.626500</td></tr>\n",

+ "    <tr><td>11</td><td>0.729400</td></tr>\n",

+ "    <tr><td>12</td><td>0.682100</td></tr>\n",

+ "    <tr><td>13</td><td>0.540100</td></tr>\n",

+ "    <tr><td>14</td><td>0.591000</td></tr>\n",

+ "    <tr><td>15</td><td>0.604800</td></tr>\n",

+ "    <tr><td>16</td><td>0.611600</td></tr>\n",

+ "    <tr><td>17</td><td>0.604000</td></tr>\n",

+ "    <tr><td>18</td><td>0.617800</td></tr>\n",

+ "    <tr><td>19</td><td>0.610100</td></tr>\n",

+ "    <tr><td>20</td><td>0.651400</td></tr>\n",

+ "    <tr><td>21</td><td>0.580700</td></tr>\n",

+ "    <tr><td>22</td><td>0.620100</td></tr>\n",

+ "    <tr><td>23</td><td>0.664400</td></tr>\n",

+ "    <tr><td>24</td><td>0.675600</td></tr>\n",

+ "    <tr><td>25</td><td>0.513200</td></tr>\n",

+ "    <tr><td>26</td><td>0.498600</td></tr>\n",

+ "    <tr><td>27</td><td>0.646800</td></tr>\n",

+ "    <tr><td>28</td><td>0.501700</td></tr>\n",

+ "    <tr><td>29</td><td>0.537800</td></tr>\n",

+ "    <tr><td>30</td><td>0.592300</td></tr>\n",

+ "    <tr><td>31</td><td>0.488400</td></tr>\n",

+ "    <tr><td>32</td><td>0.533100</td></tr>\n",

+ "    <tr><td>33</td><td>0.567700</td></tr>\n",

+ "    <tr><td>34</td><td>0.565900</td></tr>\n",

+ "    <tr><td>35</td><td>0.638700</td></tr>\n",

+ "    <tr><td>36</td><td>0.564400</td></tr>\n",

+ "    <tr><td>37</td><td>0.479600</td></tr>\n",

+ "    <tr><td>38</td><td>0.644000</td></tr>\n",

+ "    <tr><td>39</td><td>0.486400</td></tr>\n",

+ "    <tr><td>40</td><td>0.598800</td></tr>\n",

+ "    <tr><td>41</td><td>0.595200</td></tr>\n",

+ "    <tr><td>42</td><td>0.508000</td></tr>\n",

+ "    <tr><td>43</td><td>0.504900</td></tr>\n",

+ "    <tr><td>44</td><td>0.613700</td></tr>\n",

+ "    <tr><td>45</td><td>0.517800</td></tr>\n",

+ "    <tr><td>46</td><td>0.571700</td></tr>\n",

+ "    <tr><td>47</td><td>0.568900</td></tr>\n",

+ "    <tr><td>48</td><td>0.507400</td></tr>\n",

+ "    <tr><td>49</td><td>0.536600</td></tr>\n",

+ "    <tr><td>50</td><td>0.681900</td></tr>\n",

+ "    <tr><td>51</td><td>0.469500</td></tr>\n",

+ "    <tr><td>52</td><td>0.530200</td></tr>\n",

+ "    <tr><td>53</td><td>0.601400</td></tr>\n",

+ "    <tr><td>54</td><td>0.531000</td></tr>\n",

+ "    <tr><td>55</td><td>0.470400</td></tr>\n",

+ "    <tr><td>56</td><td>0.535800</td></tr>\n",

+ "    <tr><td>57</td><td>0.615800</td></tr>\n",

+ "    <tr><td>58</td><td>0.557500</td></tr>\n",

+ "    <tr><td>59</td><td>0.620600</td></tr>\n",

+ "    <tr><td>60</td><td>0.497700</td></tr>\n",

+ "    <tr><td>61</td><td>0.556100</td></tr>\n",

+ "    <tr><td>62</td><td>0.561300</td></tr>\n",

+ "    <tr><td>63</td><td>0.607200</td></tr>\n",

+ "    <tr><td>64</td><td>0.556200</td></tr>\n",

+ "    <tr><td>65</td><td>0.538400</td></tr>\n",

+ "    <tr><td>66</td><td>0.529800</td></tr>\n",

+ "    <tr><td>67</td><td>0.580100</td></tr>\n",

+ "    <tr><td>68</td><td>0.573100</td></tr>\n",

+ "    <tr><td>69</td><td>0.466100</td></tr>\n",

+ "    <tr><td>70</td><td>0.498400</td></tr>\n",

+ "    <tr><td>71</td><td>0.590800</td></tr>\n",

+ "    <tr><td>72</td><td>0.632500</td></tr>\n",

+ "    <tr><td>73</td><td>0.472400</td></tr>\n",

+ "    <tr><td>74</td><td>0.523400</td></tr>\n",

+ "    <tr><td>75</td><td>0.562500</td></tr>\n",

+ "    <tr><td>76</td><td>0.552200</td></tr>\n",

+ "    <tr><td>77</td><td>0.548400</td></tr>\n",

+ "    <tr><td>78</td><td>0.523300</td></tr>\n",

+ "    <tr><td>79</td><td>0.593300</td></tr>\n",

+ "    <tr><td>80</td><td>0.483600</td></tr>\n",

+ "    <tr><td>81</td><td>0.585400</td></tr>\n",

+ "    <tr><td>82</td><td>0.554700</td></tr>\n",

+ "    <tr><td>83</td><td>0.413900</td></tr>\n",

+ "    <tr><td>84</td><td>0.589400</td></tr>\n",

+ "    <tr><td>85</td><td>0.484100</td></tr>\n",

+ "    <tr><td>86</td><td>0.461000</td></tr>\n",

+ "    <tr><td>87</td><td>0.570700</td></tr>\n",

+ "    <tr><td>88</td><td>0.545900</td></tr>\n",

+ "    <tr><td>89</td><td>0.542300</td></tr>\n",

+ "    <tr><td>90</td><td>0.502100</td></tr>\n",

+ "    <tr><td>91</td><td>0.554100</td></tr>\n",

+ "    <tr><td>92</td><td>0.554000</td></tr>\n",

+ "    <tr><td>93</td><td>0.468700</td></tr>\n",

+ "    <tr><td>94</td><td>0.535800</td></tr>\n",

+ "    <tr><td>95</td><td>0.539100</td></tr>\n",

+ "    <tr><td>96</td><td>0.479600</td></tr>\n",

+ "    <tr><td>97</td><td>0.499100</td></tr>\n",

+ "    <tr><td>98</td><td>0.518300</td></tr>\n",

+ "    <tr><td>99</td><td>0.593800</td></tr>\n",

+ "    <tr><td>100</td><td>0.573200</td></tr>\n",

+ "    <tr><td>101</td><td>0.546400</td></tr>\n",

+ "    <tr><td>102</td><td>0.599600</td></tr>\n",

+ "    <tr><td>103</td><td>0.465200</td></tr>\n",

+ "    <tr><td>104</td><td>0.472400</td></tr>\n",

+ "    <tr><td>105</td><td>0.556100</td></tr>\n",

+ "    <tr><td>106</td><td>0.498800</td></tr>\n",

+ "    <tr><td>107</td><td>0.486900</td></tr>\n",

+ "    <tr><td>108</td><td>0.529000</td></tr>\n",

+ "    <tr><td>109</td><td>0.480100</td></tr>\n",

+ "    <tr><td>110</td><td>0.525900</td></tr>\n",

+ "    <tr><td>111</td><td>0.489700</td></tr>\n",

+ "    <tr><td>112</td><td>0.510600</td></tr>\n",

+ "    <tr><td>113</td><td>0.628300</td></tr>\n",

+ "    <tr><td>114</td><td>0.413200</td></tr>\n",

+ "    <tr><td>115</td><td>0.577800</td></tr>\n",

+ "    <tr><td>116</td><td>0.515000</td></tr>\n",

+ "    <tr><td>117</td><td>0.539300</td></tr>\n",

+ "    <tr><td>118</td><td>0.459200</td></tr>\n",

+ "    <tr><td>119</td><td>0.533700</td></tr>\n",

+ "    <tr><td>120</td><td>0.501700</td></tr>\n",

+ "    <tr><td>121</td><td>0.528400</td></tr>\n",

+ "    <tr><td>122</td><td>0.475900</td></tr>\n",

+ "    <tr><td>123</td><td>0.437600</td></tr>\n",

+ "    <tr><td>124</td><td>0.551700</td></tr>\n",

+ "    <tr><td>125</td><td>0.464600</td></tr>\n",

+ "    <tr><td>126</td><td>0.442300</td></tr>\n",

+ "    <tr><td>127</td><td>0.611100</td></tr>\n",

+ "    <tr><td>128</td><td>0.425300</td></tr>\n",

+ "    <tr><td>129</td><td>0.516900</td></tr>\n",

+ "    <tr><td>130</td><td>0.469100</td></tr>\n",

+ "    <tr><td>131</td><td>0.486200</td></tr>\n",

+ "    <tr><td>132</td><td>0.492100</td></tr>\n",

+ "    <tr><td>133</td><td>0.511100</td></tr>\n",

+ "    <tr><td>134</td><td>0.559500</td></tr>\n",

+ "    <tr><td>135</td><td>0.537600</td></tr>\n",

+ "    <tr><td>136</td><td>0.426800</td></tr>\n",

+ "    <tr><td>137</td><td>0.474200</td></tr>\n",

+ "    <tr><td>138</td><td>0.543500</td></tr>\n",

+ "    <tr><td>139</td><td>0.539800</td></tr>\n",

+ "    <tr><td>140</td><td>0.481500</td></tr>\n",

+ "    <tr><td>141</td><td>0.481400</td></tr>\n",

+ "    <tr><td>142</td><td>0.562000</td></tr>\n",

+ "    <tr><td>143</td><td>0.409100</td></tr>\n",

+ "    <tr><td>144</td><td>0.440900</td></tr>\n",

+ "    <tr><td>145</td><td>0.437700</td></tr>\n",

+ "    <tr><td>146</td><td>0.427300</td></tr>\n",

+ "    <tr><td>147</td><td>0.393100</td></tr>\n",

+ "    <tr><td>148</td><td>0.480300</td></tr>\n",

+ "    <tr><td>149</td><td>0.509300</td></tr>\n",

+ "    <tr><td>150</td><td>0.450200</td></tr>\n",

+ "    <tr><td>151</td><td>0.530500</td></tr>\n",

+ "    <tr><td>152</td><td>0.475300</td></tr>\n",

+ "    <tr><td>153</td><td>0.521300</td></tr>\n",

+ "    <tr><td>154</td><td>0.519500</td></tr>\n",

+ "    <tr><td>155</td><td>0.539400</td></tr>\n",

+ "    <tr><td>156</td><td>0.433300</td></tr>\n",

+ "    <tr><td>157</td><td>0.495400</td></tr>\n",

+ "    <tr><td>158</td><td>0.415200</td></tr>\n",

+ "    <tr><td>159</td><td>0.608800</td></tr>\n",

+ "    <tr><td>160</td><td>0.524700</td></tr>\n",

+ "    <tr><td>161</td><td>0.438700</td></tr>\n",

+ "    <tr><td>162</td><td>0.504800</td></tr>\n",

+ "    <tr><td>163</td><td>0.455700</td></tr>\n",

+ "    <tr><td>164</td><td>0.455100</td></tr>\n",

+ "    <tr><td>165</td><td>0.592300</td></tr>\n",

+ "    <tr><td>166</td><td>0.565700</td></tr>\n",

+ "    <tr><td>167</td><td>0.480800</td></tr>\n",

+ "    <tr><td>168</td><td>0.546100</td></tr>\n",

+ "    <tr><td>169</td><td>0.463100</td></tr>\n",

+ "    <tr><td>170</td><td>0.573400</td></tr>\n",

+ "    <tr><td>171</td><td>0.500700</td></tr>\n",

+ "    <tr><td>172</td><td>0.516700</td></tr>\n",

+ "    <tr><td>173</td><td>0.572000</td></tr>\n",

+ "    <tr><td>174</td><td>0.411700</td></tr>\n",

+ "    <tr><td>175</td><td>0.452700</td></tr>\n",

+ "    <tr><td>176</td><td>0.424900</td></tr>\n",

+ "    <tr><td>177</td><td>0.489200</td></tr>\n",

+ "    <tr><td>178</td><td>0.574300</td></tr>\n",

+ "    <tr><td>179</td><td>0.479700</td></tr>\n",

+ "    <tr><td>180</td><td>0.487800</td></tr>\n",

+ "    <tr><td>181</td><td>0.513700</td></tr>\n",

+ "    <tr><td>182</td><td>0.492800</td></tr>\n",

+ "    <tr><td>183</td><td>0.535100</td></tr>\n",

+ "    <tr><td>184</td><td>0.501100</td></tr>\n",

+ "    <tr><td>185</td><td>0.450400</td></tr>\n",

+ "    <tr><td>186</td><td>0.484500</td></tr>\n",

+ "  </tbody>\n",

+ "</table>"

+ ],

+ "text/plain": [

+ ""

+ ]

+ },

+ "metadata": {},

+ "output_type": "display_data"

+ }

+ ],

+ "source": [

+ "# Train the model\n",

+ "trainer_stats = trainer.train()"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/",

+ "height": 501,

+ "referenced_widgets": [

+ "75dc3defc03044d5a3d5327f982f4ad9",

+ "69a1c928a91b485e899218b4c48bbd0f",

+ "2cd1ab0231be43078bf2b0861c66756b",

+ "7d6ef80065f2457ca6a584dea0ee193a",

+ "8deac706db694fd9af003a52d9e65c18",

+ "d1fdaab3815b4beebd51e5240208c14c",

+ "0c677a8b3f234e0480995a3f3f511cfc",

+ "bf95bf76909b418db87bed807a8fcd2e",

+ "60362ddf26274bbda10f888cae24b527",

+ "cf64d3a6baf545bebc84c62b2863a2a3",

+ "a023e723ac13430087c8f019e7bd2946",

+ "37b277adce0f47f58ce4f153ebbf9885",

+ "ae4269c9c5434ee79eaa31d197e382c0",

+ "d7ef28d78e19450e82bd3e42a9fab928",

+ "0b0ce59c58f947c5ab6b456c4b2b8fd8",

+ "c05b434f65a04a07880f82d5dda991e3",

+ "aa8a0d882d1047e8a6a08b04df1214ea",

+ "344f6b55db5e43c7b98750e4cbf907d1",

+ "58aa7a36dbd14511a43268242bf3486c",

+ "9b0488d548f3446d8d20af6d3ac56739",

+ "2c966a7792594a9bace7cada70bc6e20",

+ "eb694d1a1a674598bccff290c5c96077",

+ "343312cb90c84c6bbfec606bed32765a",

+ "4cbc59fddff34f44a0e99e15ff1ae102",

+ "932c5742442b421389363d2e216626d7",

+ "f57fce9a80e549609474d84b7d935b33",

+ "53f6ce3049474fbda959fd52fe9b5890",

+ "71cc82a978664061b4778a73ac25afdb",

+ "d4f41624cbea494f803bd76cd78f315f",

+ "8f02d26e18614cd38e891d5ee019bc3d",

+ "fefdd42e29544fdba8ba1dd7f28cc85d",

+ "ca279528c62c41559fff7d150a229567",

+ "3abc1f45407c4c8c9c6b6d676246aa3b",

+ "7b9eed20c6554407afb846170e2d946d",

+ "27cacb0adaa944c19d80cd9cb0ddffa9",

+ "b5037ef5dcdc43a9850bb073addc947f",

+ "cc7ce94c244548c8954b35bb92936bdd",

+ "3bcfc01fccce49ef87f62b0046d54059",

+ "4be3ffa40ad14461adeb5bfafad806f6",

+ "b3f9eb630a6a4b88857e4d9e1869ffca",

+ "91a556fcd64843fe877a580eb3e21826",

+ "6d93c3a9f44342bdbf840b6ce3d04ab6",

+ "ea83b9bb0b134206a99628d3fe66503a",

+ "a018604c1f624fb49e1064696a5533fd",

+ "8b9066519c9546a5b8ad95b239828b89",

+ "9ec9215968024471a69fa7a9a62db7bb",

+ "901c6eca0c4a40e7a9ed9fa968874787",

+ "687438cfca3140d4bc132b6b91ab616c",

+ "c16a355a4e2443509f3362504882ab7c",

+ "0e372476bcc04cd5a89a7b0626742c7e",

+ "56aac0b6cad64e7a9d672583c633c8bf",

+ "f2da104837ae4a3289e24a1bd7de2415",

+ "95a67d05e14c40c2a5bdcb322bc61af8",

+ "f8d6262c216f4226b6d26ad9961b9622",

+ "00b6d832a0c546c2afb059bd29e54991",

+ "76af8b2071e8453794f432a7c0bf63d0",

+ "51730e19fe0c4ead871b9b6cf7895e05",

+ "2c1cdcb9a80446539b3e229c5cc8ce33",

+ "7b1889d55328460fbd9c348a2a72e3c8",

+ "8bb5bfadd1984e5a8c0b27dfbb16bf9a",

+ "624a5a04f510474e90ac9aaf7ed264b1",

+ "405277a1c6944a35b1cb082de3b9dbdb",

+ "32b541d4aa6d4dac8a9dc0e5cdf9ba44",

+ "2cf66ab1c0f34d71b90ca6ac10eec6d6",

+ "c9ab627f27d7456d9de249610ffef4a1",

+ "4b5fa3674a8f467aba1089a39e2978b5",

+ "1cc3ff808e1543eb9716deba509c37ee",

+ "426ba1f107354dc78ded5f1ba400895b",

+ "02ddf97d03404b92a09a0b6a869847d8",

+ "9b22f445c87a44f9afe39de4a20f08f4",

+ "9f197e2d7fb54261acbb2d1ddb8b6a30",

+ "6d9294f45ab14b139f968dae24663783",

+ "bd5608c4250a4d35aca16aebd1dba7f2",

+ "92e84bbf66f34be3aa0da2ff046daf94",

+ "75a890e9c93543eeb2917c165f0d5d20",

+ "3d4c73330cc44748a4525c7901a281a1",

+ "4df8ff8e37004b53adcb9a5b32c40fb2"

+ ]

+ },

+ "id": "WBeMl1mh1ArJ",

+ "outputId": "4e869fbe-da2b-417b-b1d3-78c913efc4cb"

+ },

+ "outputs": [],

+ "source": [

+ "# Save to the local directory and push it to the Hub\n",

+ "model.save_pretrained(MODEL_NAME)\n",

+ "tokenizer.save_pretrained(MODEL_NAME)\n",

+ "model.save_pretrained_merged(MODEL_NAME, tokenizer, save_method=\"merged_16bit\")\n",

+ "\n",

+ "fine_tuned_model = f\"{REPO_NAME}/{MODEL_NAME}\"\n",

+ "model.push_to_hub(fine_tuned_model, safe_serialization=None)\n",

+ "tokenizer.push_to_hub(fine_tuned_model, safe_serialization=None)\n",

+ "model.push_to_hub_merged(fine_tuned_model, tokenizer, save_method=\"merged_16bit\") # for vLLM\n",

+ "model.push_to_hub_gguf(\n",

+ "    f\"{fine_tuned_model}_q4_k_m\", tokenizer, quantization_method=\"q4_k_m\"\n",

+ ")  # as GGUF"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {},

+ "source": [

+ "## Run Inference"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "kR3gIAX-SM2q",

+ "outputId": "a8416e33-b27f-4056-8886-ddfec6c891d9"

+ },

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "Okay, so I need to find all the prime numbers between 0 and 125. Hmm, primes are numbers greater than 1 that have no divisors other than 1 and themselves. So, first, I should probably start by listing all numbers from 0 to 125 and then eliminate the non-primes.\n",

+ "\n",

+ "Wait, but 0 and 1 aren't primes. So I can ignore them. The smallest prime is 2. So maybe I should start checking from 2 onwards.\n",

+ "\n",

+ "I remember that one method to check for primes is the Sieve of Eratosthenes. That's an efficient algorithm for finding all primes up to a certain limit. Let me think about how that works. The idea is to create a list of all numbers up to the limit and then iteratively mark the multiples of each prime starting from 2. The numbers that remain unmarked are primes.\n",

+ "\n",

+ "So, applying this to the range 0-125. I'll create a list of booleans from 0 to 125, initializing them all to True except index 0 and 1 which are False. Then, for each number starting from 2, if it's still marked as prime, I'll mark all its multiples as not prime.\n",

+ "\n",

+ "Let me outline the steps:\n",

+ "\n",

+ "1. Create a list of size 126 (since it's up to 125) and initialize all to True.\n",

+ "2. Set the indices 0 and 1 to False.\n",

+ "3. For each number i starting from 2 up to the square root of 125 (which is around 11.18), if the number is still True, mark all multiples of i as False.\n",

+ "4. After processing, the indices that are still True are primes.\n",

+ "\n",

+ "Wait, but the square root is a bit more precise. The Sieve of Eratosthenes only needs to check up to the square root of the limit because any factor larger than that would have a corresponding factor smaller than the square root.\n",

+ "\n",

+ "So, for 125, the square root is about 11.18, so I'll loop i from 2 to 11.\n",

+ "\n",

+ "Let me try to simulate this:\n",

+ "\n",

+ "- Start with all True except 0 and 1.\n",

+ "- i=2: mark multiples 4,6,8,... up to 124.\n",

+ "- i=3: mark multiples 9,12,15,... up to 123.\n",

+ "- i=4: already marked, so skip.\n",

+ "- i=5: mark 25,30, etc., but since 5 is a prime, it should only mark multiples beyond 25.\n",

+ "- And so on until i=11.\n",

+ "\n",

+ "After this, the indices that are True are the primes.\n",

+ "\n",

+ "Once I have the list of primes, I can collect them into a list and return that list.\n",

+ "\n",

+ "So, putting this into Python code, I'll create the sieve, then extract the primes.\n",

+ "\n",

+ "Let me think about how to implement this. I'll write a function called get_primes_up_to_n(n) which returns the list of primes up to n.\n",

+ "\n",

+ "Wait, but the user wants primes from 0 to 125. So the function should handle that.\n",

+ "\n",

+ "Alternatively, I can create a function that takes a number and returns the primes up to that number.\n",

+ "\n",

+ "So, the steps in code:\n",

+ "\n",

+ "1. Define a function get_primes(n) that returns primes up to n.\n",

+ "2. Inside the function, create a list of booleans from 0 to n, initializing to True, then setting 0 and 1 to False.\n",

+ "3. For each i from 2 to sqrt(n) + 1, check if it's still True. If so, mark all multiples of i as False.\n",

+ "4. Collect all indices that are still True and return them as a list.\n",

+ "\n",

+ "Wait, but in Python, the square root can be calculated using math.sqrt, but since n is an integer, I should take the integer part. Or, better, loop up to int(math.sqrt(n)) + 1.\n",

+ "\n",

+ "Wait, no. The sieve only needs to check up to sqrt(n) because any composite number would have a factor less than or equal to sqrt(n). So for n=125, sqrt is ~11.18, so i should go up to 11.\n",

+ "\n",

+ "So, in code:\n",

+ "\n",

+ "import math\n",

+ "\n",

+ "def get_primes_up_to_n(n):\n",

+ "    if n < 2:\n",

+ "        return []\n",

+ "    sieve = [True] * (n + 1)\n",

+ "    sieve[0] = sieve[1] = False\n",

+ "    for i in range(2, int(math.sqrt(n)) + 1):\n",

+ "        if sieve[i]:\n",

+ "            for j in range(i * i, n + 1, i):\n",

+ "                sieve[j] = False\n",

+ "    primes = [i for i, is_prime in enumerate(sieve) if is_prime]\n",

+ "    return primes\n",

+ "\n",

+ "Then, call get_primes_up_to_n(125) to get the primes.\n",

+ "\n",

+ "Testing this function with n=125 should give me all primes from 2 up to 125.\n",

+ "\n",

+ "Let me see, for example, 2 is a prime, 3,5,7, etc., up to 125.\n",

+ "\n",

+ "I think this should work. So the code is straightforward.\n",

+ "\n",

+ "\n",

+ "To find all prime numbers from 0 to 125, we can use the Sieve of Eratosthenes algorithm, which efficiently identifies primes by marking non-prime numbers. Here's a concise Python solution:\n",

+ "\n",

+ "```python\n",

+ "import math\n",

+ "\n",

+ "def get_primes_up_to_n(n):\n",

+ "    if n < 2:\n",

+ "        return []\n",

+ "    sieve = [True] * (n + 1)\n",

+ "    sieve[0] = sieve[1] = False\n",

+ "    for i in range(2, int(math.sqrt(n)) + 1):\n",

+ "        if sieve[i]:\n",

+ "            for j in range(i * i, n + 1, i):\n",

+ "                sieve[j] = False\n",

+ "    primes = [i for i, is_prime in enumerate(sieve) if is_prime]\n",

+ "    return primes\n",

+ "\n",

+ "primes = get_primes_up_to_n(125)\n",

+ "print(primes)\n",

+ "```\n",

+ "\n",

+ "This code initializes a boolean list for numbers up to 125, marks non-primes, and returns the primes. The result is a list of primes from 2 to 125.\n",

+ "<|end▁of▁sentence|>\n"

+ ]

+ }

+ ],

+ "source": [

+ "# Run inference\n",

+ "question = \"How can I get the prime numbers from 0 to 125?\"\n",

+ "\n",

+ "FastLanguageModel.for_inference(model)\n",

+ "inputs = tokenizer([prompt_style.format(question, \"\")], return_tensors=\"pt\").to(\"cuda\")\n",

+ "\n",

+ "outputs = model.generate(\n",

+ "    input_ids=inputs.input_ids,\n",

+ "    attention_mask=inputs.attention_mask,\n",

+ "    max_new_tokens=2048,\n",

+ "    use_cache=True,\n",

+ ")\n",

+ "response = tokenizer.batch_decode(outputs)\n",

+ "print(response[0].split(\"### Response:\")[1])\n"

+ ]

+ }

+ ],

+ "metadata": {

+ "accelerator": "GPU",

+ "colab": {

+ "gpuType": "T4",

+ "provenance": []

+ },

+ "kernelspec": {

+ "display_name": "Python 3",

+ "name": "python3"

+ },

+ "language_info": {

+ "name": "python"

+ },

+ "widgets": {

+ "application/vnd.jupyter.widget-state+json": {

+ "007ba2f3f37c48f8a5e7e21a4a2e5a71": {

+ "model_module": "@jupyter-widgets/controls",

+ "model_module_version": "1.5.0",

+ "model_name": "DescriptionStyleModel",

+ "state": {

+ "_model_module": "@jupyter-widgets/controls",

+ "_model_module_version": "1.5.0",

+ "_model_name": "DescriptionStyleModel",

+ "_view_count": null,

+ "_view_module": "@jupyter-widgets/base",

+ "_view_module_version": "1.2.0",

+ "_view_name": "StyleView",

+ "description_width": ""

+ }

+ },

+ "00b6d832a0c546c2afb059bd29e54991": {

+ "model_module": "@jupyter-widgets/controls",

+ "model_module_version": "1.5.0",

+ "model_name": "DescriptionStyleModel",

+ "state": {

+ "_model_module": "@jupyter-widgets/controls",

+ "_model_module_version": "1.5.0",

+ "_model_name": "DescriptionStyleModel",

+ "_view_count": null,

+ "_view_module": "@jupyter-widgets/base",

+ "_view_module_version": "1.2.0",

+ "_view_name": "StyleView",

+ "description_width": ""

+ }

+ },

+ "0142b4aeab9b4d87819b86c26a908f7a": {

+ "model_module": "@jupyter-widgets/controls",

+ "model_module_version": "1.5.0",

+ "model_name": "DescriptionStyleModel",

+ "state": {

+ "_model_module": "@jupyter-widgets/controls",

+ "_model_module_version": "1.5.0",

+ "_model_name": "DescriptionStyleModel",

+ "_view_count": null,

+ "_view_module": "@jupyter-widgets/base",

+ "_view_module_version": "1.2.0",

+ "_view_name": "StyleView",

+ "description_width": ""

+ }

+ },

+ "02302f414c384422920e3e310f597063": {

+ "model_module": "@jupyter-widgets/base",

+ "model_module_version": "1.2.0",

+ "model_name": "LayoutModel",

+ "state": {